Unit 4 Social interactions


A combination of self-interest, a regard for the wellbeing of others, and appropriate institutions can yield desirable social outcomes when people interact.

- Game theory is a way of understanding how people interact based on the constraints that limit their actions, their motives, and their beliefs about what others will do.

- Experiments and other evidence show that self-interest, a concern for others, and a preference for fairness are all important motives that explain how people interact.

- In most interactions there is some conflict of interest between people, but also some opportunity for mutual gain.

- The pursuit of self-interest can sometimes lead to results that are considered good by all participants, or outcomes that none of the participants would prefer.

- Self-interest can be harnessed for the general good in markets, by governments limiting the actions that people are free to take, and by one’s peers imposing punishments on actions that lead to bad outcomes.

- A concern for others and for fairness allows us to internalize the effects of our actions on others, and so can contribute to good social outcomes.

The scientific evidence is now overwhelming: climate change presents very serious global risks, and it demands an urgent global response.1

This is the blunt beginning of the executive summary of the Stern Review, published in 2006. The British Chancellor of the Exchequer (finance minister) commissioned a group of economists, led by former World Bank chief economist Sir Nicholas (now Lord) Stern, to assess the evidence for climate change, and to try to understand its economic implications. The Stern Review predicts that the benefits of early action to slow climate change will outweigh the costs of neglecting the issue.

The Fifth Assessment Report by the Intergovernmental Panel on Climate Change (IPCC) agrees. Early action would mean a significant cut in greenhouse gas emissions, achieved by reducing our consumption of energy-intensive goods, switching to different energy technologies, reducing the impacts of agriculture and land-use change, and improving the efficiency of current technologies.2

But none of this will happen if we pursue what Stern referred to as ‘business as usual’: a scenario in which people, governments and businesses are free to pursue their own pleasures, politics, and profits without taking adequate account of the effect of their actions on others, including future generations.

National governments disagree on the policies that should be adopted. Many nations in the developed world are pressing for strict global controls on carbon emissions, while others, whose economic catch-up has until recently been dependent on coal-burning technologies, have resisted these measures.

- social dilemma

- A situation in which actions taken independently by individuals in pursuit of their own private objectives result in an outcome which is inferior to some other feasible outcome that could have occurred if people had acted together, rather than as individuals.

The problem of climate change is far from unique. It is an example of what is called a social dilemma. Social dilemmas—like climate change—occur when people do not take adequate account of the effects of their decisions on others, whether these are positive or negative.

Social dilemmas occur frequently in our lives. Traffic jams happen when our choice of a way to get around—for example driving alone to work rather than car-pooling—does not take account of the contribution to congestion that we make. Similarly, overusing antibiotics for minor illnesses may help the sick person who takes them recover more quickly, but creates antibiotic-resistant bacteria that have a much more harmful effect on many others.

The Tragedy of the Commons

In 1968, Garrett Hardin, a biologist, published an article about social dilemmas in the journal Science, called ‘The Tragedy of the Commons’. He argued that resources that are not owned by anyone (sometimes called ‘common property’ or ‘common-pool resources’), such as the earth’s atmosphere or fish stocks, are easily overexploited unless we control access in some way. Fishermen as a group would be better off not catching as much tuna, and consumers as a whole would be better off not eating too much of it. Humanity would be better off emitting fewer pollutants, but if you, as an individual, decide to cut your consumption, your carbon footprint, or the number of tuna you catch, your choice will hardly affect global levels.3

- free ride

- Benefiting from the contributions of others to some cooperative project without contributing oneself.

Examples of Hardin’s tragedies and other social dilemmas are all around us: if you live with roommates, or in a family, you know just how difficult it is to keep a clean kitchen or bathroom. When one person cleans, everyone benefits, but it is hard work. Whoever cleans up bears this cost. The others are sometimes called free riders. If as a student you have ever done a group assignment, you understand that the cost of effort (to study the problem, gather evidence, or write up the results) is individual, yet the benefits (a better grade, higher class standing, or simply the admiration of classmates) go to the whole group.4

Resolving social dilemmas

There is nothing new about social dilemmas; we have been facing them since prehistory.

- altruism

- The willingness to bear a cost in order to benefit somebody else.

More than 2,500 years ago, the Greek storyteller Aesop wrote about a social dilemma in his fable Belling the Cat. A group of mice needs one of its members to place a bell around a cat’s neck. Once the bell is on, the cat cannot catch and eat the other mice. But the outcome may not be so good for the mouse that takes the job.5 There are countless examples during wars or natural catastrophes in which individuals sacrifice their lives for others who are not family members, and may even be total strangers. These actions are termed altruistic.

Altruistic self-sacrifice is not the most important way that societies resolve social dilemmas and reduce free riding. Sometimes the problems can be resolved by government policies. For example, governments have successfully imposed quotas to prevent the over-exploitation of stocks of cod in the North Atlantic. In the UK, the amount of waste that is dumped in landfills, rather than being recycled, has been dramatically reduced by a landfill tax.

Local communities also create institutions to regulate behaviour. Irrigation communities need people to work to maintain the canals that benefit the whole community. Individuals also need to use scarce water sparingly so that other crops will flourish, although this will lead to smaller crops for themselves. In Valencia, Spain, communities of farmers have used a set of customary rules for centuries to regulate communal tasks and to avoid using too much water. Since the Middle Ages they have had an arbitration court called the Tribunal de las Aguas (Water Court) that resolves conflicts between farmers about the application of the rules. The ruling of the Tribunal is not legally enforceable. Its power comes only from the respect of the community, yet its decisions are almost universally followed.

- game theory

- A branch of mathematics that studies strategic interactions, meaning situations in which each actor knows that the benefits they receive depend on the actions taken by all. See also: game.

- social interactions

- Situations in which the actions taken by each person affect other people’s outcomes as well as their own.

Even present-day global environmental problems have sometimes been tackled effectively. The Montreal Protocol has been remarkably successful. It was created to phase out and eventually ban the chlorofluorocarbons (CFCs) that threatened to destroy the ozone layer that protects us against harmful ultraviolet radiation.

In this unit, we will use the tools of game theory to model social interactions, in which the decisions of individuals affect other people as well as themselves. We will look at situations that result in social dilemmas and how people can sometimes solve them—but sometimes not (or not yet), as in the case of climate change.

But not all social interactions lead to social dilemmas, even if individuals act in pursuit of their own interests. We will start in the next section with an example where the ‘invisible hand’ of the market, as described by Adam Smith, channels self-interest so that individuals acting independently do reach a mutually beneficial outcome.

Exercise 4.1 Social dilemmas

Using the news headlines from last week:

- Identify two social dilemmas that have been reported (try to use examples not discussed above).

- For each, specify how it satisfies the definition of a social dilemma.

4.1 Social interactions: Game theory

On which side of the road should you drive? If you live in Japan, the UK, or Indonesia, you drive on the left. If you live in South Korea, France, or the US, you drive on the right. If you grew up in Sweden, you drove on the left until 5 a.m. on 3 September 1967, and at 5.01 a.m. you started driving on the right. The government sets a rule, and we follow it.

But suppose we just left the choice to drivers to pursue their self-interest and to select one side of the road or the other. If everyone else was already driving on the right, self-interest (avoiding a collision) would be sufficient to motivate a driver to drive on the right as well. Concern for other drivers, or a desire to obey the law, would not be necessary.

Devising policies to promote people’s wellbeing requires an understanding of the difference between situations in which self-interest can promote general wellbeing, and cases in which it leads to undesirable results. To analyse this, we will introduce game theory, a way of modelling how people interact.

In Unit 3 we saw how a student deciding how much to study and a farmer choosing how hard to work both faced a set of feasible options, determined by a production function. Each then made decisions to obtain the best possible outcome. But in the models we have studied so far, the outcome did not depend on what anyone else did. Neither the student nor the farmer was engaged in a social interaction.

Social and strategic interactions

- strategic interaction

- A social interaction in which the participants are aware of the ways that their actions affect others (and the ways that the actions of others affect them).

- strategy

- An action (or a course of action) that a person may take when that person is aware of the mutual dependence of the results for herself and for others. The outcomes depend not only on that person’s actions, but also on the actions of others.

- game

- A model of strategic interaction that describes the players, the feasible strategies, the information that the players have, and their payoffs. See also: game theory.

In this unit, we consider social interactions, meaning situations in which there are two or more people, and the actions taken by each person affect both their own outcome and other people’s outcomes. For example, one person’s choice of how much to heat his or her home will affect everyone’s experience of global climate change.

We use four terms:

- When people are engaged in a social interaction and are aware of the ways that their actions affect others, and vice versa, we call this a strategic interaction.

- A strategy is defined as an action (or a course of action) that a person may take when that person is aware of the mutual dependence of the results for herself and for others. The outcomes depend not only on that person’s actions, but also on the actions of others.

- Models of strategic interactions are described as games.

- Game theory is a set of models of strategic interactions. It is widely used in economics and elsewhere in the social sciences.

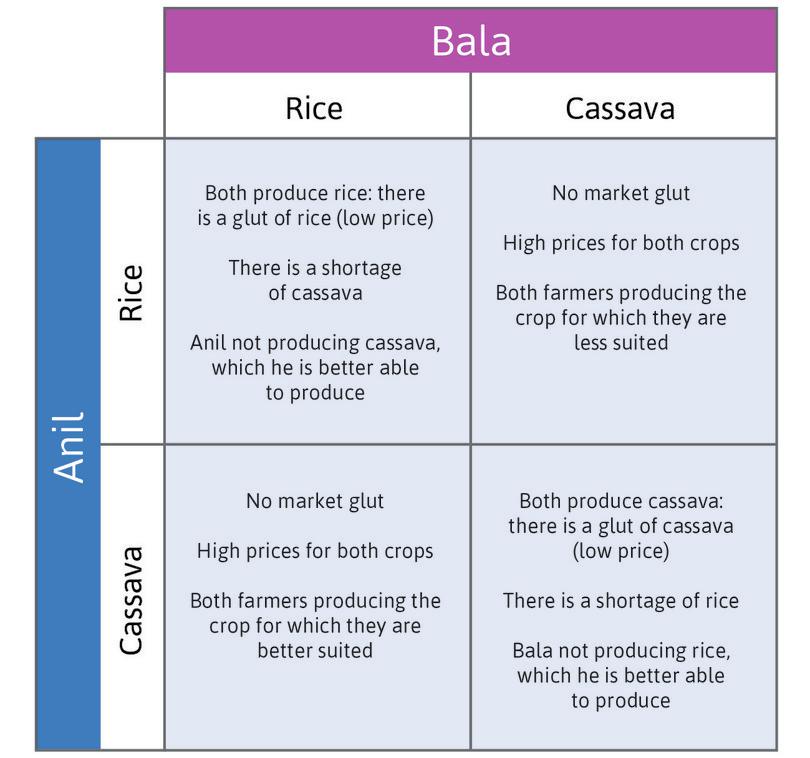

To see how game theory can clarify strategic interactions, imagine two farmers, who we will call Anil and Bala. They face a problem: should they grow rice or cassava? We assume that they have the ability to grow both types of crop, but can only grow one type at a time.

- division of labour

- The specialization of producers to carry out different tasks in the production process. Also known as: specialization.

Anil’s land is better suited for growing cassava, while Bala’s is better suited for rice. The two farmers have to determine the division of labour, that is, who will specialize in which crop. They decide this independently, which means they do not meet together to discuss a course of action.

(Assuming independence may seem odd in this model of just two farmers, but later we apply the same logic to situations like climate change, in which hundreds or even millions of people interact, most of them total strangers to one another. So assuming that Anil and Bala do not come to some common agreement before taking action is useful for us.)

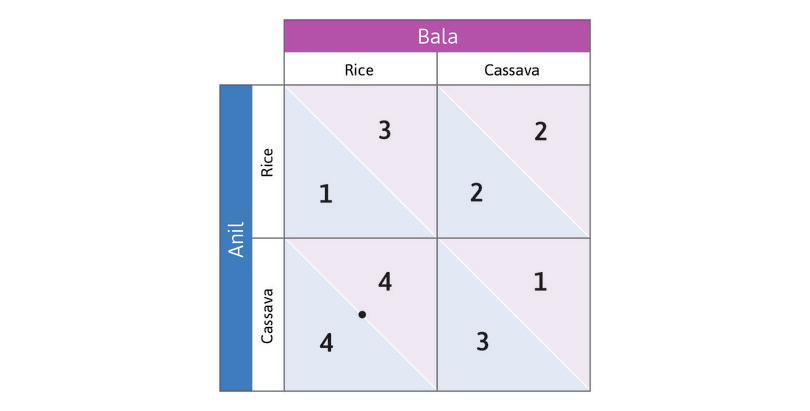

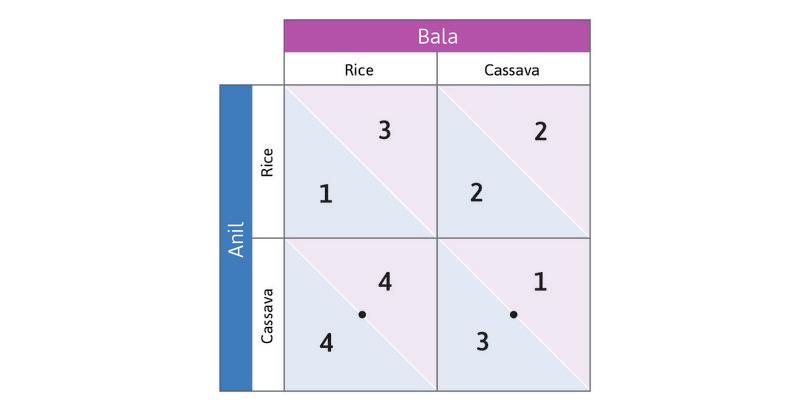

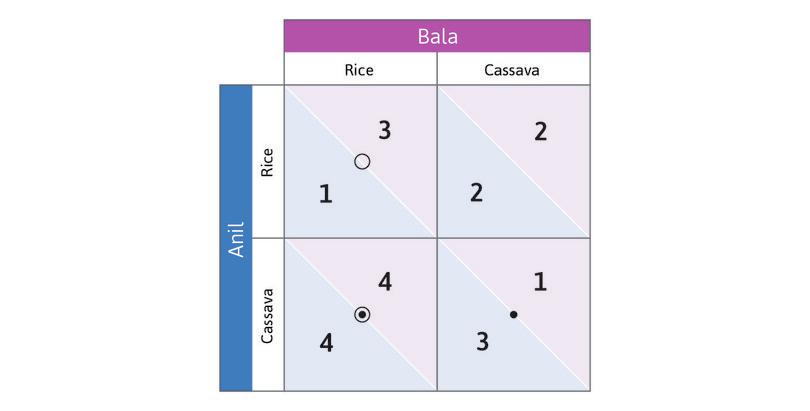

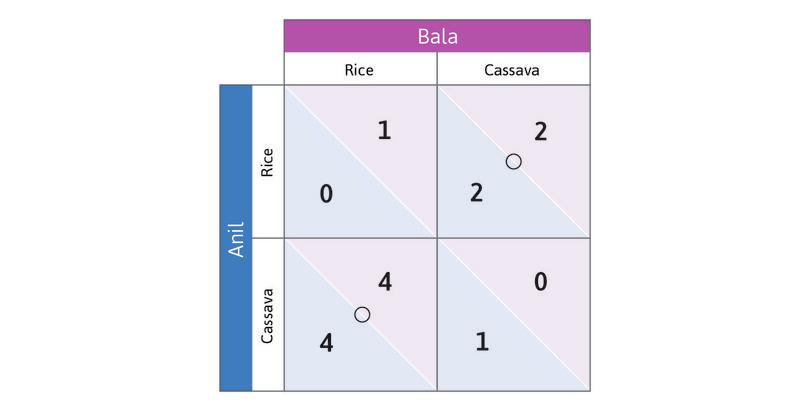

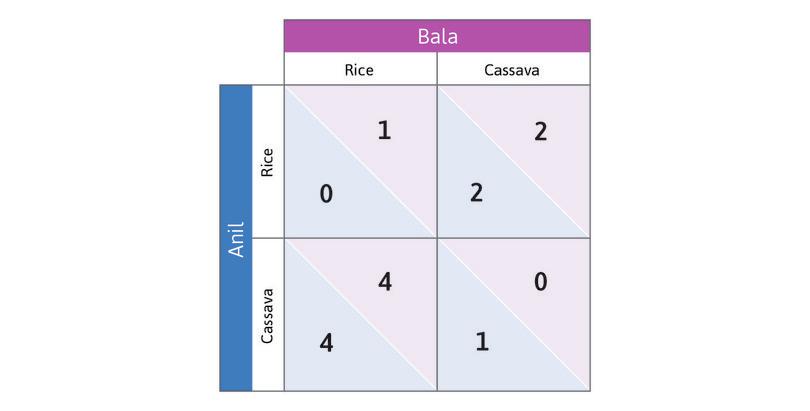

They both sell whatever crop they produce in a nearby village market. On market day, if they bring less rice to the market, the price will be higher. The same goes for cassava. Figure 4.1 describes their interaction, which is what we call a game. Let’s explain what Figure 4.1 means, because you will be seeing this a lot.

Game

A description of a social interaction, which specifies:

- The players: Who is interacting with whom

- The feasible strategies: Which actions are open to the players

- The information: What each player knows when making their decision

- The payoffs: What the outcomes will be for each of the possible combinations of actions

Anil’s choices are the rows of the table and Bala’s are the columns. We call Anil the ‘row player’ and Bala the ‘column player’.

When an interaction is represented in a table like Figure 4.1, each entry describes the outcome of a hypothetical situation. For example, the upper-left cell should be interpreted as:

Suppose (for whatever reason) Anil planted rice and Bala planted rice too. What would we see?

There are four possible hypothetical situations. Figure 4.1 describes what would happen in each case.
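The four elements of a game listed above map naturally onto a small data structure. The sketch below is only an illustration: the `Game` class and its field names are our own, not standard terminology, and the payoffs are left empty because the outcomes have not been specified yet. Enumerating every combination of strategies reproduces the four hypothetical situations in the table.

```python
from dataclasses import dataclass, field
from itertools import product


@dataclass
class Game:
    """Hypothetical container for the four elements of a game described in the text."""
    players: tuple[str, ...]                  # who is interacting with whom
    strategies: dict[str, tuple[str, ...]]    # which actions are open to each player
    information: str                          # what each player knows when deciding
    # payoffs: one tuple per combination of strategies (to be filled in later)
    payoffs: dict[tuple[str, ...], tuple[float, ...]] = field(default_factory=dict)

    def profiles(self) -> list[tuple[str, ...]]:
        """Every combination of strategies, i.e. every hypothetical situation (one per cell)."""
        return list(product(*(self.strategies[p] for p in self.players)))


crop_game = Game(
    players=("Anil", "Bala"),
    strategies={"Anil": ("Rice", "Cassava"), "Bala": ("Rice", "Cassava")},
    information="Simultaneous one-shot game: neither knows the other's choice",
)

for anil, bala in crop_game.profiles():
    print(f"Suppose Anil planted {anil} and Bala planted {bala}. What would we see?")
```

Each tuple corresponds to one cell of the table: Anil's choice (the row) first, Bala's (the column) second.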

To simplify the model, we assume that:

- There are no other people involved or affected in any way.

- The selection of which crop to grow is the only decision that Anil and Bala need to make.

- Anil and Bala will interact just once (this is called a ‘one-shot game’).

- They decide simultaneously. When a player makes a decision, that player doesn’t know what the other person has decided to do.

- payoff

- The benefit to each player associated with the joint actions of all the players.

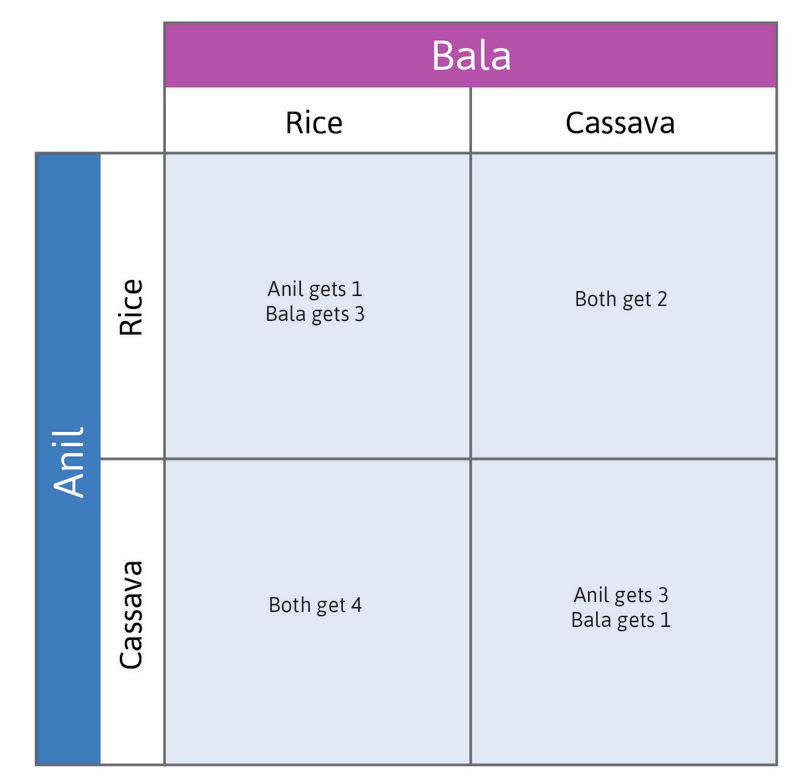

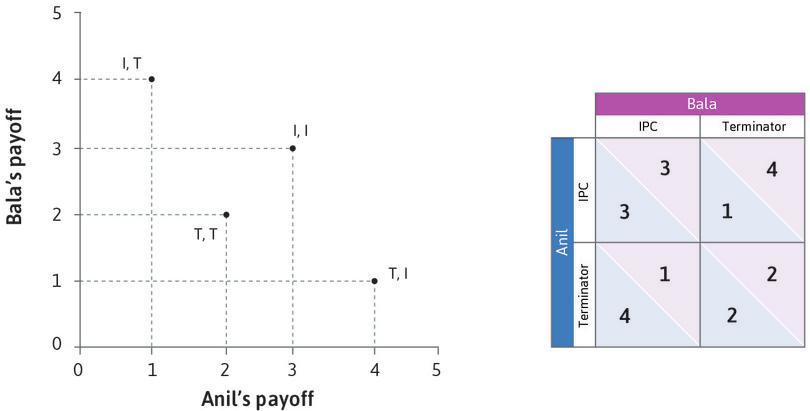

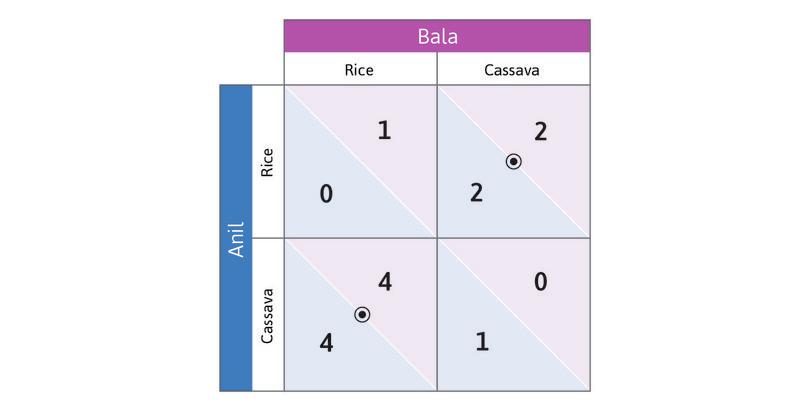

Figure 4.2a shows the payoffs for Anil and Bala in each of the four hypothetical situations—the incomes they would receive if the hypothetical row and column actions were taken. Since their incomes depend on the market prices, which in turn depend on their decisions, we have called this an ‘invisible hand’ game.

- Because the market price falls when it is flooded with one crop, they can do better if they specialize compared to when they both produce the same good.

- When they produce different goods they would both do better if each person specialized in the crop that was most suitable for their land.

Question 4.1 Choose the correct answer(s)

In a simultaneous one-shot game:

- A simultaneous game (as opposed to a sequential game) means that players all make a decision on their action simultaneously.

- In a one-shot game (as opposed to a repeated game), there is no ‘future’. The actions are taken only once.

- The players take actions non-cooperatively, driven by self-interest.

- An essential element of strategic games is that each player takes into account the possible actions of other players, when the actual choices made are unknown.

4.2 Equilibrium in the invisible hand game

- best response

- In game theory, the strategy that will give a player the highest payoff, given the strategies that the other players select.

Game theory describes social interactions, but it may also provide predictions about what will happen. To predict the outcome of a game, we need another concept: best response. This is the strategy that will give a player the highest payoff, given the strategies the other players select.

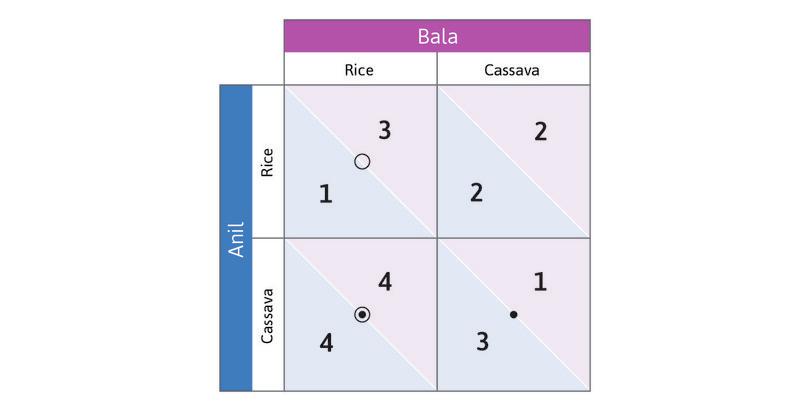

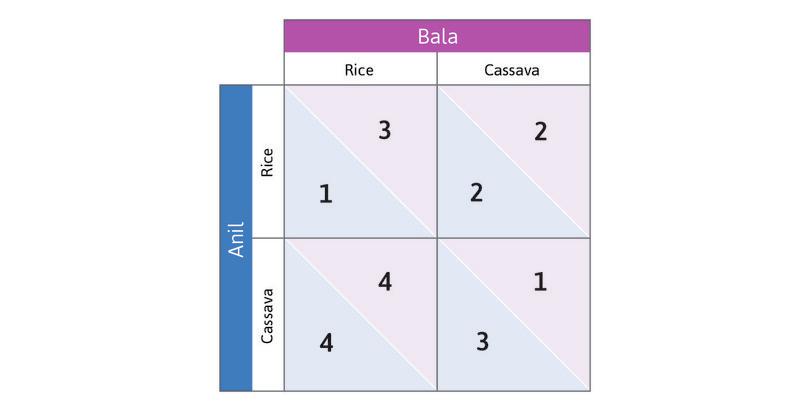

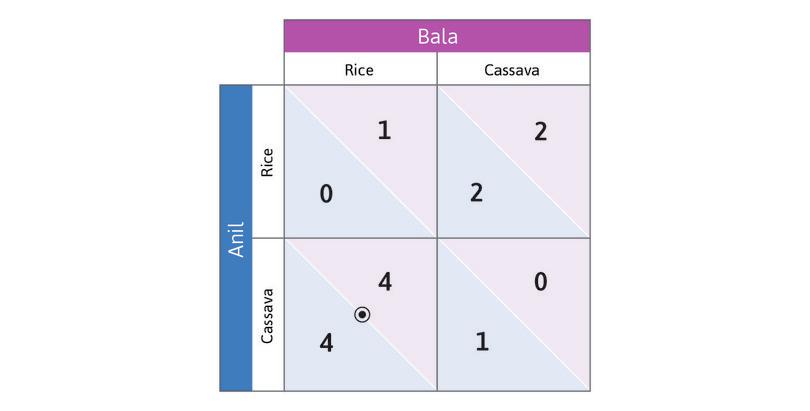

In Figure 4.2b we represent the payoffs for Anil and Bala in the invisible hand game using a standard format called a payoff matrix. A matrix is just any rectangular (in this case square) array of numbers. The first number in each box is the reward received by the row player (whose name begins with A as a reminder that his payoff is first). The second number is the column player’s payoff.

Think about best responses in this game. Suppose you are Anil, and you consider the hypothetical case in which Bala has chosen to grow rice. Which response yields you the higher payoff? You would grow cassava (in this case, you—Anil—would get a payoff of 4, but you would get a payoff of only 1 if you grew rice instead).

- dominant strategy

- Action that yields the highest payoff for a player, no matter what the other players do.

Work through the steps in Figure 4.2b to see that choosing Cassava is also Anil’s best response if Bala chooses Cassava. So Cassava is Anil’s dominant strategy: it will give him the highest payoff, whatever Bala does. And you will see that in this game Bala also has a dominant strategy. The analysis also gives you a handy method for keeping track of best responses by placing dots and circles in the payoff matrix.
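The dot-and-circle bookkeeping can also be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: only Anil's payoffs against Bala's Rice (4 for Cassava, 1 for Rice) are given in the text, so the remaining numbers in `PAYOFFS` are hypothetical values chosen to fit the description of the game, not a copy of Figure 4.2b. The helper names are also our own. A strategy counts as dominant here if it is a best response to every strategy the other player might choose.

```python
STRATEGIES = ("Rice", "Cassava")
# (Anil's strategy, Bala's strategy) -> (Anil's payoff, Bala's payoff).
# Hypothetical numbers: only Anil's 4 and 1 against Bala's Rice come from the text.
PAYOFFS = {
    ("Rice", "Rice"): (1, 3),
    ("Rice", "Cassava"): (2, 2),
    ("Cassava", "Rice"): (4, 4),
    ("Cassava", "Cassava"): (3, 1),
}


def payoff(player: int, own: str, other: str) -> int:
    """Payoff to player 0 (Anil, row) or 1 (Bala, column) from `own` against `other`."""
    profile = (own, other) if player == 0 else (other, own)
    return PAYOFFS[profile][player]


def best_responses(player: int, other: str) -> list[str]:
    """Strategies giving the player the highest payoff, given the other's strategy."""
    best = max(payoff(player, s, other) for s in STRATEGIES)
    return [s for s in STRATEGIES if payoff(player, s, other) == best]


def dominant_strategy(player: int):
    """A strategy that is a best response to every opposing strategy, or None."""
    for s in STRATEGIES:
        if all(s in best_responses(player, other) for other in STRATEGIES):
            return s
    return None


for other in STRATEGIES:
    print(f"If Bala plays {other}, Anil's best response: {best_responses(0, other)}")
print("Anil's dominant strategy:", dominant_strategy(0))
print("Bala's dominant strategy:", dominant_strategy(1))
```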

Because both players have a dominant strategy, we have a simple prediction about what each will do: play their dominant strategy. Anil will grow cassava, and Bala will grow rice.

- dominant strategy equilibrium

- An outcome of a game in which every player plays his or her dominant strategy.

This pair of strategies is a dominant strategy equilibrium of the game.

Remember from Unit 2 that an equilibrium is a self-perpetuating situation. Something of interest does not change. In this case, Anil choosing Cassava and Bala choosing Rice is an equilibrium because neither of them would want to change their decision after seeing what the other player chose.

If we find that both players in a two-player game have dominant strategies, the game has a dominant strategy equilibrium. As we will see later, this does not always happen. But when it does, we predict that these are the strategies that will be played.

Because both Anil and Bala have a dominant strategy, their choice of crop is not affected by what they expect the other person to do. This is similar to the models in Unit 3 in which Alexei’s choice of hours of study, or Angela’s working hours, did not depend on what others did. But here, even though the decision does not depend on what the others do, the payoff does. For example, if Anil is playing his dominant strategy (Cassava) he is better off if Bala plays Rice than if Bala plays Cassava as well.

In the dominant strategy equilibrium Anil and Bala have specialized in producing the good for which their land is better suited. Simply pursuing their self-interest—choosing the strategy for which they got the highest payoff—resulted in an outcome that was:

- the best of the four possible outcomes for each player

- the outcome that yielded the largest total payoffs for the two farmers combined
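The two claims in this list can be checked mechanically. The sketch below uses the same hypothetical payoffs as an illustration (only Anil's 4 and 1 against Bala's Rice come from the text) and takes the dominant strategy equilibrium (Cassava, Rice) from the analysis above as given.

```python
# Hypothetical invisible hand payoffs: (Anil, Bala) -> (Anil's payoff, Bala's payoff)
PAYOFFS = {
    ("Rice", "Rice"): (1, 3),
    ("Rice", "Cassava"): (2, 2),
    ("Cassava", "Rice"): (4, 4),
    ("Cassava", "Cassava"): (3, 1),
}
EQUILIBRIUM = ("Cassava", "Rice")  # each farmer plays his dominant strategy

# Claim 1: the equilibrium is the best of the four outcomes for each player.
best_for_each = all(
    PAYOFFS[EQUILIBRIUM][i] >= max(p[i] for p in PAYOFFS.values()) for i in (0, 1)
)

# Claim 2: the equilibrium yields the largest total payoff for the two farmers combined.
totals = {profile: sum(p) for profile, p in PAYOFFS.items()}
largest_total = totals[EQUILIBRIUM] == max(totals.values())

print("Totals:", totals)
print("Best for each player:", best_for_each)
print("Largest total payoff:", largest_total)
```

Swapping in other payoffs shows when these checks fail, as they will in the prisoners' dilemma.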

In this example, the dominant strategy equilibrium is the outcome that each would have chosen if they had a way of coordinating their decisions. Although they independently pursued their self-interest, they were guided ‘as if by an invisible hand’ to an outcome that was in both of their best interests.

Real economic problems are never this simple, but the basic logic is the same. The pursuit of self-interest without regard for others is sometimes considered to be morally bad, but the study of economics has identified cases in which it can lead to outcomes that are socially desirable. There are other cases, however, in which the pursuit of self-interest leads to results that are not in the self-interest of any of the players. The prisoners’ dilemma game, which we study next, describes one of these situations.

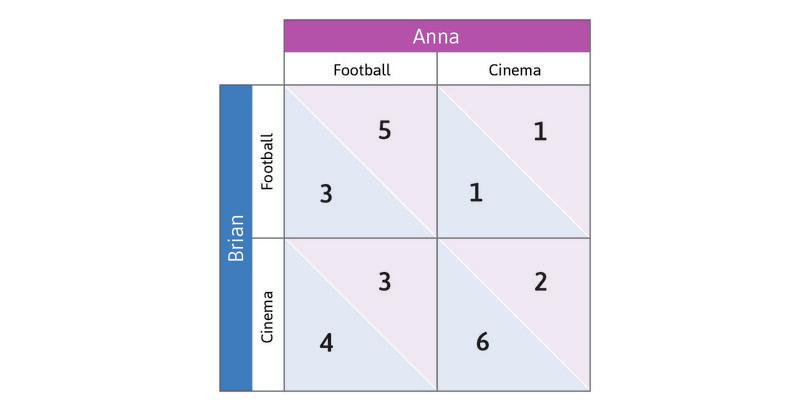

Question 4.2 Choose the correct answer(s)

Brian likes going to the cinema more than watching football. Anna, on the other hand, prefers watching football to going to the cinema. Either way, they both prefer to be together rather than spending an afternoon apart. The following table represents the happiness levels (payoffs) of Anna and Brian, depending on their choice of activity (the first number is Brian’s happiness level while the second number is Anna’s):

Based on the information above, we can conclude that:

- For Brian, the dominant strategy is Cinema.

- The dominant strategy equilibrium is the outcome in which each player plays his/her dominant strategy. In this game it is (Cinema, Football), with the payoff (4, 3).

- Anna would attain the highest happiness level if they could watch football together. Similarly, Brian would be happiest if they both went to the cinema.

- (Cinema, Football) is a dominant strategy equilibrium. The lack of any incentive to deviate is a feature of any dominant strategy equilibrium.

When economists disagree

Homo economicus in question: Are people entirely selfish?

For centuries, economists and just about everyone else have debated whether people are entirely self-interested or are sometimes happy to help others even when it costs them something to do so. Homo economicus (economic man) is the nickname given to the selfish and calculating character that you find in economics textbooks. Have economists been right to imagine homo economicus as the only actor on the economic stage?

In the same book in which he first used the phrase ‘invisible hand’, Adam Smith also made it clear that he thought we were not homo economicus: ‘How selfish soever man may be supposed, there are evidently some principles in his nature which interest him in the fortunes of others, and render their happiness necessary to him, though he derives nothing from it except the pleasure of seeing it.’ (The Theory of Moral Sentiments, 1759)

But most economists since Smith have disagreed. In 1881, Francis Edgeworth, a founder of modern economics, made this perfectly clear in his book Mathematical Psychics: ‘The first principle of economics is that every agent is actuated only by self-interest.’6

Yet everyone has experienced, and sometimes even performed, great acts of kindness or bravery on behalf of others in situations in which there was little chance of a reward. The question for economists is: should the unselfishness evident in these acts be part of how we reason about behaviour?

Some say ‘no’: many seemingly generous acts are better understood as attempts to gain a favourable reputation among others that will benefit the actor in the future.

Maybe helping others and observing social norms is just self-interest with a long time horizon. This is what the essayist H. L. Mencken thought: ‘conscience is the inner voice which warns that somebody may be looking.’7

Since the 1990s, in an attempt to resolve the debate on empirical grounds, economists have used economic games in hundreds of experiments all over the world to observe the behaviour of individuals (students, farmers, whale hunters, warehouse workers, and CEOs) as they make real choices about sharing.

- reciprocity

- A preference to be kind or to help others who are kind and helpful, and to withhold help and kindness from people who are not helpful or kind.

- inequality aversion

- A dislike of outcomes in which some individuals receive more than others.

In these experiments, we almost always see some self-interested behaviour. But we also observe altruism, reciprocity, aversion to inequality, and other preferences that are different from self-interest. In many experiments homo economicus is the minority. This is true even when the amounts being shared (or kept for oneself) are worth many days’ wages.

Is the debate resolved? Many economists think so, and now model people as sometimes altruistic, sometimes inequality averse, and sometimes reciprocal, as well as self-interested like homo economicus. They point out that the assumption of self-interest is appropriate for many economic settings, like shopping or the way that firms use technology to maximize profits. But it’s not as appropriate in other settings, such as how we pay taxes, or why we work hard for our employer.

4.3 The prisoners’ dilemma

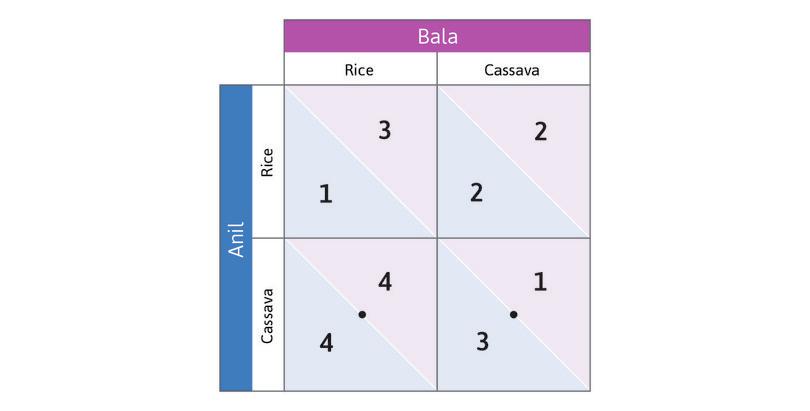

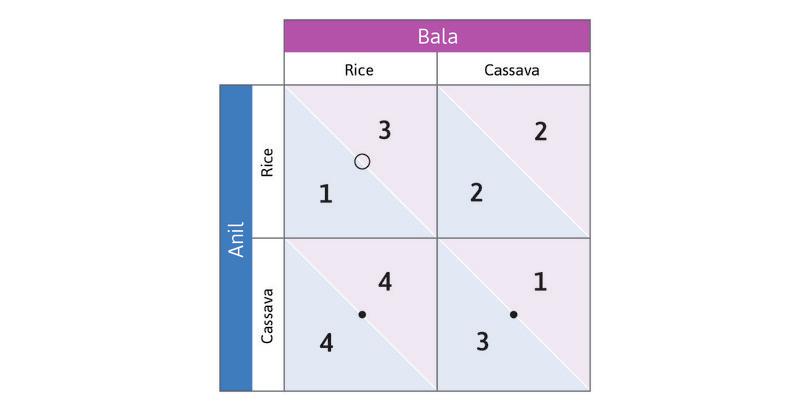

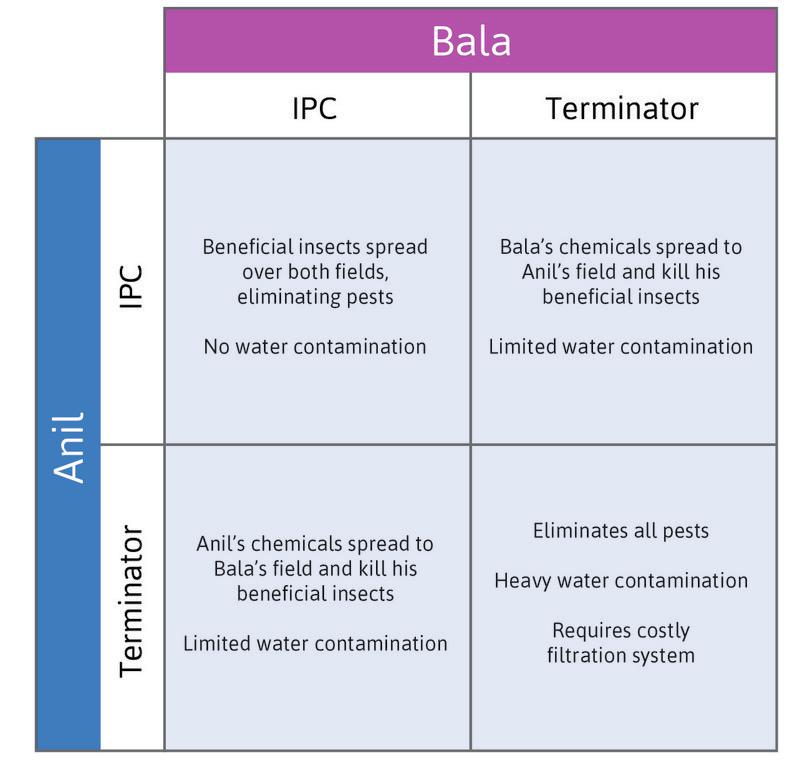

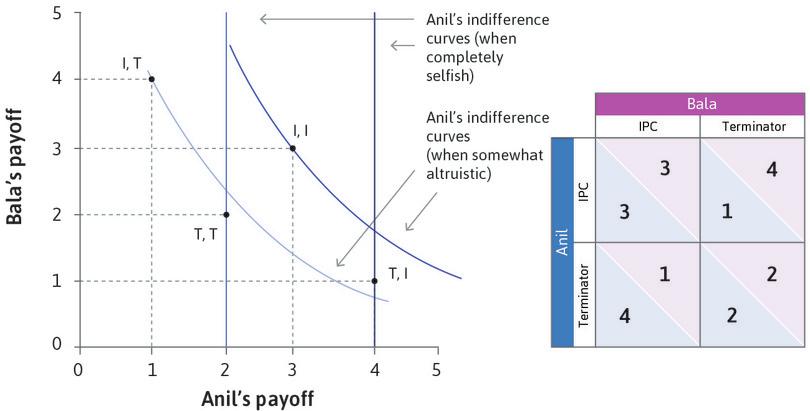

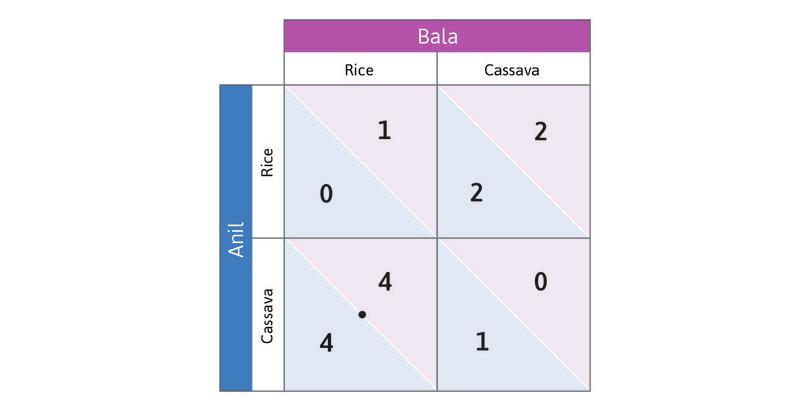

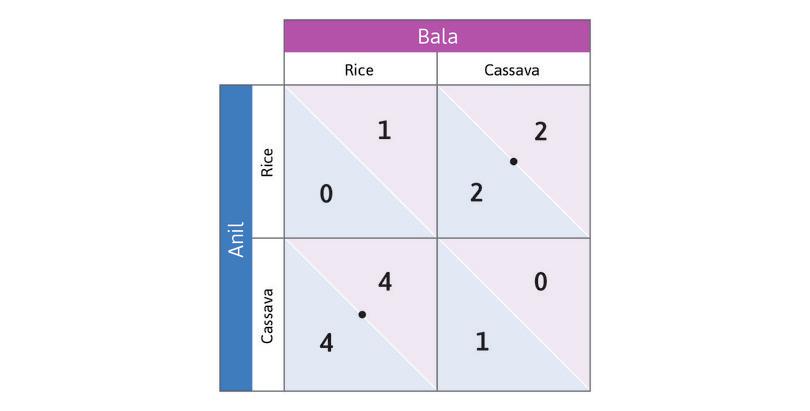

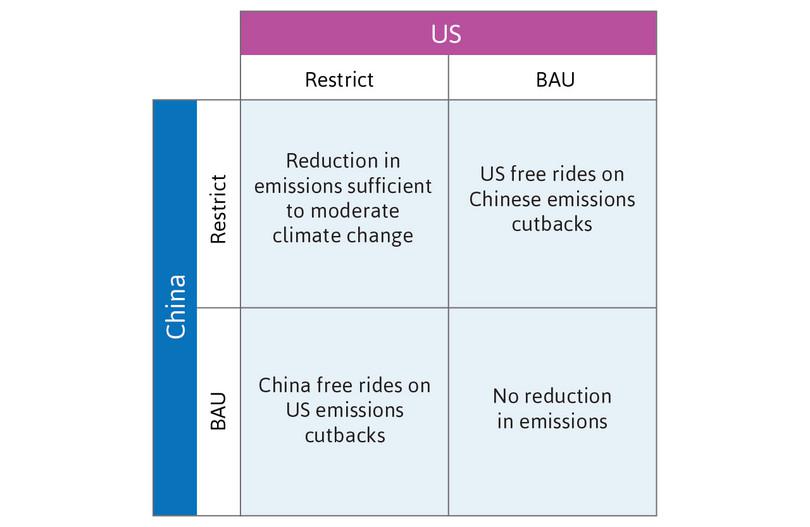

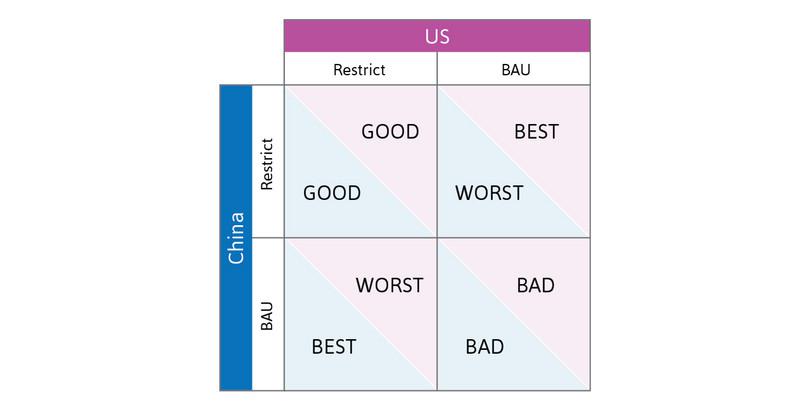

Imagine that Anil and Bala are now facing a different problem. Each is deciding how to deal with pest insects that destroy the crops they cultivate in their adjacent fields. Each has two feasible strategies:

- The first is to use an inexpensive chemical called Terminator. It kills every insect for miles around. Terminator also leaks into the water supply that they both use.

- The second is to use integrated pest control (IPC) instead of a chemical. A farmer using IPC introduces beneficial insects to the farm. The beneficial insects eat the pest insects.

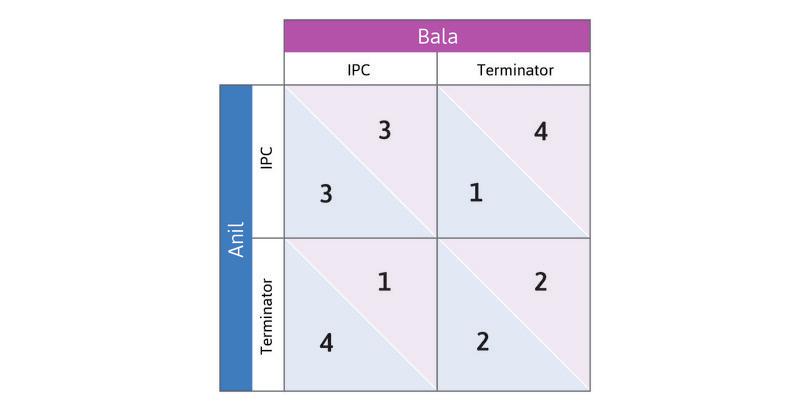

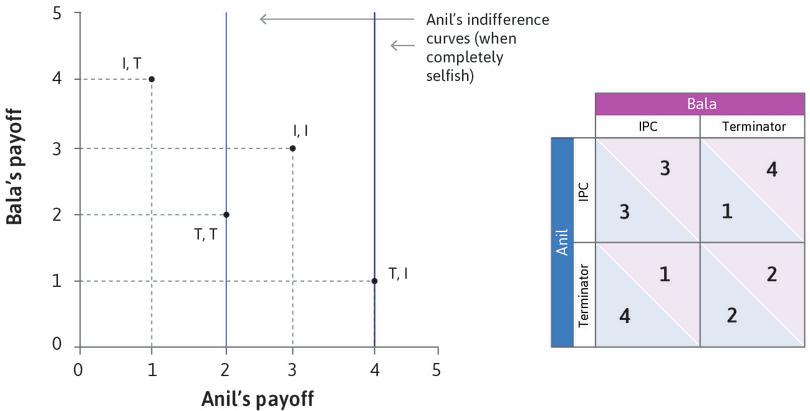

If just one of them chooses Terminator, the damage is quite limited. If they both choose it, water contamination becomes a serious problem, and they need to buy a costly filtering system. Figures 4.3a and 4.3b describe their interaction.

Both Anil and Bala are aware of these outcomes. As a result, they know that their payoff (the amount of money they will make at harvest time, minus the costs of their pest control strategy and the installation of water filtration if that becomes necessary) will depend not only on what choice they make, but also on the other’s choice. This is a strategic interaction.

How will they play the game? To figure this out, we can use the same method as in the previous section (draw the dots and circles in the payoff matrix for yourself).

Anil’s best responses:

- If Bala chooses IPC: Terminator (cheap eradication of pests, with little water contamination).

- If Bala chooses Terminator: Terminator (IPC costs more and cannot work, since Bala’s chemicals will kill the beneficial insects).

So Terminator is Anil’s dominant strategy.

You can check, similarly, that Terminator is also a dominant strategy for Bala.

Because Terminator is the dominant strategy for both, we predict that both will use it. Both players using insecticide is the dominant strategy equilibrium of the game.

- prisoners’ dilemma

- A game in which the payoffs in the dominant strategy equilibrium are lower for each player, and also lower in total, than if neither player played the dominant strategy.

Anil and Bala each receive payoffs of 2. But both would be better off if they both used IPC instead. So the predicted outcome is not the best feasible outcome. The pest control game is a particular example of a game called the prisoners’ dilemma.
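The same kind of check can test whether a 2×2 game fits the definition of a prisoners' dilemma given above. This is a hedged sketch: the text states that each farmer gets 2 in the equilibrium, and (in Section 4.4) that Anil would give up 1 by switching from Terminator to IPC while sparing Bala a loss of 2; the remaining payoffs are inferred by assuming the game is symmetric. The helper names are hypothetical.

```python
STRATEGIES = ("IPC", "Terminator")
# (Anil, Bala) -> (Anil's payoff, Bala's payoff); partly inferred, assuming symmetry
PAYOFFS = {
    ("IPC", "IPC"): (3, 3),
    ("IPC", "Terminator"): (1, 4),
    ("Terminator", "IPC"): (4, 1),
    ("Terminator", "Terminator"): (2, 2),
}


def payoff(player: int, own: str, other: str) -> int:
    """Payoff to player 0 (Anil, row) or 1 (Bala, column) from `own` against `other`."""
    profile = (own, other) if player == 0 else (other, own)
    return PAYOFFS[profile][player]


def strictly_dominant(player: int):
    """Strategy that beats every alternative against each opposing strategy, or None."""
    for s in STRATEGIES:
        if all(payoff(player, s, o) > payoff(player, t, o)
               for o in STRATEGIES for t in STRATEGIES if t != s):
            return s
    return None


def is_prisoners_dilemma() -> bool:
    """Definition from the text: in the dominant strategy equilibrium, each player and the
    total do worse than if neither player played the dominant strategy."""
    dominant = (strictly_dominant(0), strictly_dominant(1))
    if None in dominant:
        return False  # no dominant strategy equilibrium, so not a prisoners' dilemma
    # In a 2x2 game, "not playing the dominant strategy" means playing the other one.
    other = tuple(next(s for s in STRATEGIES if s != d) for d in dominant)
    eq, alt = PAYOFFS[dominant], PAYOFFS[other]
    return all(e < a for e, a in zip(eq, alt)) and sum(eq) < sum(alt)


print("Dominant strategies:", strictly_dominant(0), strictly_dominant(1))
print("Prisoners' dilemma:", is_prisoners_dilemma())
```

Plugging in the hypothetical invisible hand payoffs instead makes `is_prisoners_dilemma()` return False, because there the equilibrium is already the best outcome for both players.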

The prisoners’ dilemma

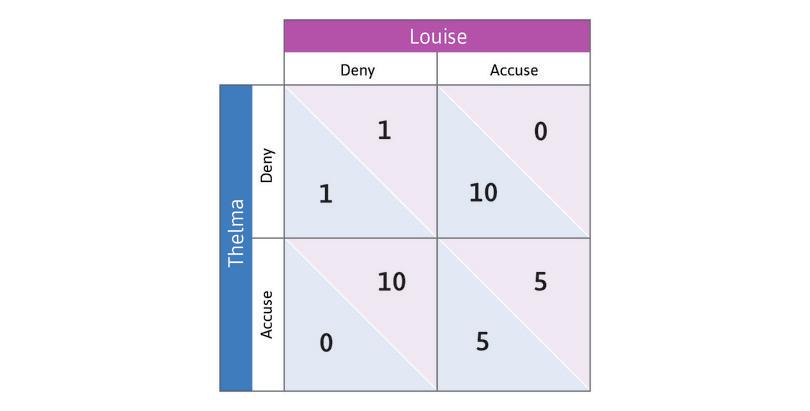

The name of this game comes from a story about two prisoners (we call them Thelma and Louise) whose strategies are either to Accuse (implicate) the other in a crime that the prisoners may have committed together, or Deny that the other prisoner was involved.

If both Thelma and Louise deny it, they are freed after a few days of questioning.

If one of them accuses the other while the other denies, the accuser will be freed immediately (a sentence of zero years), whereas the other gets a long jail sentence (10 years).

Lastly, if both Thelma and Louise choose Accuse (meaning each implicates the other), they both get a jail sentence. This sentence is reduced from 10 years to 5 years because of their cooperation with the police. The payoffs of the game are shown in Figure 4.4.

(The payoffs are written in terms of years of prison—so Louise and Thelma prefer lower numbers.)

In a prisoners’ dilemma, both players have a dominant strategy (in this example, Accuse) which, when played by both, results in an outcome that is worse for both than if they had both adopted a different strategy (in this example, Deny).
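Because Thelma and Louise's payoffs are years in prison, a best response minimizes rather than maximizes. A minimal sketch follows; the text gives every sentence except 'a few days', which we assume, hypothetically, to be three days expressed in years.

```python
FEW_DAYS = 3 / 365  # hypothetical: "a few days" taken as three days, in years

STRATEGIES = ("Deny", "Accuse")
# (Thelma, Louise) -> (Thelma's years, Louise's years); fewer years is better
YEARS = {
    ("Deny", "Deny"): (FEW_DAYS, FEW_DAYS),
    ("Deny", "Accuse"): (10, 0),
    ("Accuse", "Deny"): (0, 10),
    ("Accuse", "Accuse"): (5, 5),
}


def years(player: int, own: str, other: str) -> float:
    """Sentence for player 0 (Thelma) or 1 (Louise) when playing `own` against `other`."""
    profile = (own, other) if player == 0 else (other, own)
    return YEARS[profile][player]


def best_response(player: int, other: str) -> str:
    """Strategy that minimizes the player's sentence, given the other's strategy."""
    return min(STRATEGIES, key=lambda s: years(player, s, other))


for other in STRATEGIES:
    print(f"If Louise plays {other}, Thelma's best response is {best_response(0, other)}")

both_accuse, both_deny = YEARS[("Accuse", "Accuse")], YEARS[("Deny", "Deny")]
print("Worse for both than mutual denial:", all(x > y for x, y in zip(both_accuse, both_deny)))
```

The conclusion does not depend on the exact value of `FEW_DAYS`: any positive sentence shorter than 5 years gives the same result.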

Our story about Thelma and Louise is hypothetical, but this game applies to many real problems. For example, watch the clip from a TV quiz show called Golden Balls, and you will see how one ordinary person ingeniously resolves the prisoners’ dilemma.

In economic examples, the mutually beneficial strategy (Deny) is generally termed Cooperate, while the dominant strategy (Accuse) is called Defect. Cooperate does not mean that players get together and discuss what to do. The rules of the game are always that each player decides independently on a strategy.

The contrast between the invisible hand game and the prisoners’ dilemma shows that self-interest can lead to favourable outcomes, but can also lead to outcomes that nobody would endorse. Such examples can help us understand more precisely how markets can harness self-interest to improve the workings of the economy, but also the limitations of markets.

Three aspects of the interaction between Anil and Bala caused us to predict an unfortunate outcome in their prisoners’ dilemma game:

- They did not place any value on the payoffs of the other person, and so did not internalize (take account of) the costs that their actions inflicted on the other.

- There was no way that Anil, Bala or anyone else could make the farmer who used the insecticide pay for the harm that it caused.

- They were not able to make an agreement beforehand about what each would do. Had they been able to do so, they could have simply agreed to use IPC, or banned the use of Terminator.

If we can overcome one or more of these problems, the outcome that both of them prefer may result. So, in the rest of this unit, we will examine ways to do this.

Question 4.3 Choose the correct answer(s)

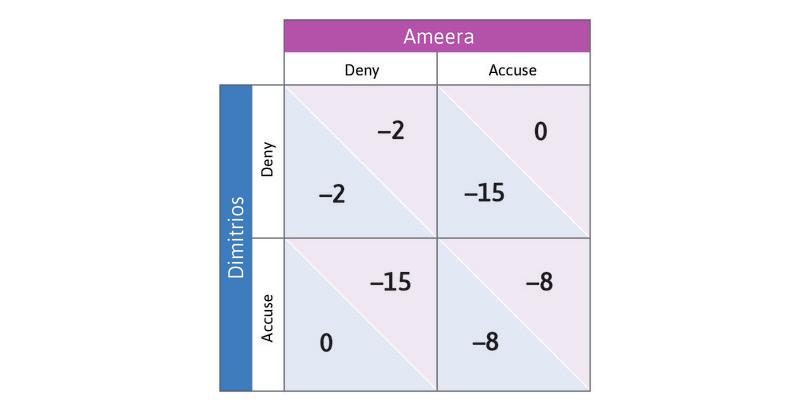

Dimitrios and Ameera work for an international investment bank as foreign exchange traders. They are being questioned by the police on their suspected involvement in a series of market manipulation trades. The table below shows the cost of each strategy (in terms of the length in years of jail sentences they will receive), depending on whether they accuse each other or deny the crime. The first number is the payoff to Dimitrios, while the second number is the payoff to Ameera (the negative numbers signify losses). Assume that the game is a simultaneous one-shot game.

Based on this information, we can conclude that:

- Denying is a dominated strategy for both Dimitrios and Ameera, so they will Accuse.

- For both Dimitrios and Ameera, Accusing is a dominant strategy. Therefore, the outcome in which they both Accuse and end up with 8-year sentences is a dominant strategy equilibrium.

- Accusing is Ameera’s best response regardless of what Dimitrios does, so she will always Accuse. It is a dominant strategy.

- This outcome can only happen if both Dimitrios and Ameera Deny. Denying is a dominated strategy for both of them, so this would never happen.

Exercise 4.2 Political advertising

Many people consider political advertising (campaign advertisements) to be a classic example of a prisoners’ dilemma.

- Using examples from a recent political campaign with which you are familiar, explain whether this is the case.

- Write down an example payoff matrix for this case.

4.4 Social preferences: Altruism

When students play one-shot prisoners’ dilemma games in classroom or laboratory experiments, sometimes for substantial sums of real money, it is common to observe half or more of the participants playing Cooperate rather than Defect, even though Defect is the dominant strategy for players who care only about their own monetary payoffs. One interpretation of these results is that players are altruistic.

For example, if Anil had cared sufficiently about the harm that he would inflict on Bala by using Terminator when Bala was using IPC, then IPC would have been Anil’s best response to Bala’s IPC. And if Bala had felt the same way, then IPC would have been a mutual best response, and the two would no longer have been in a prisoners’ dilemma.

A person who is willing to bear a cost in order to help another person is said to have altruistic preferences. In the example just given, Anil would be willing to give up 1 payoff unit rather than impose a loss of 2 on Bala. His opportunity cost of choosing IPC when Bala had chosen IPC would be 1, and the choice would confer a benefit of 2 on Bala, so he would be acting altruistically.

- social preferences

- Preferences that place a value on what happens to other people, and on acting morally, even if it results in lower payoffs for the individual.

The economic models we used in Unit 3 assumed self-interested preferences: Alexei, the student, and Angela, the farmer, cared only about their own free time and their own grades or consumption. But people generally care not only about what happens to themselves, but also about what happens to others. When they do, we say that the individual has social preferences. Altruism is an example of a social preference. Spite and envy are also social preferences.

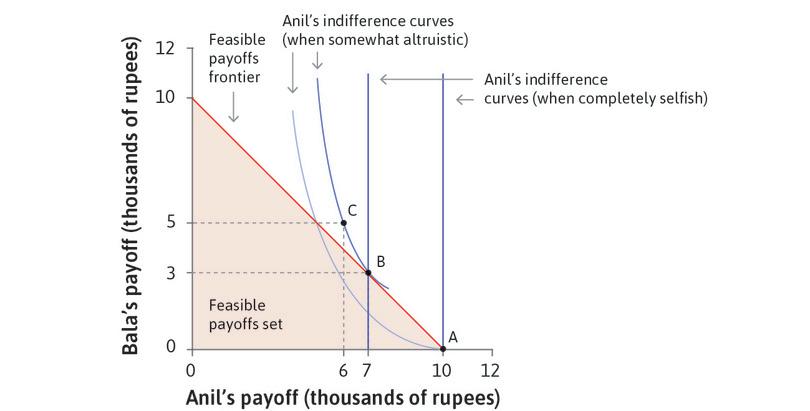

Altruistic preferences as indifference curves

In previous units, we used indifference curves and feasible sets to model Alexei’s and Angela’s behaviour. We can do the same to study how people interact when social preferences are part of their motivation.

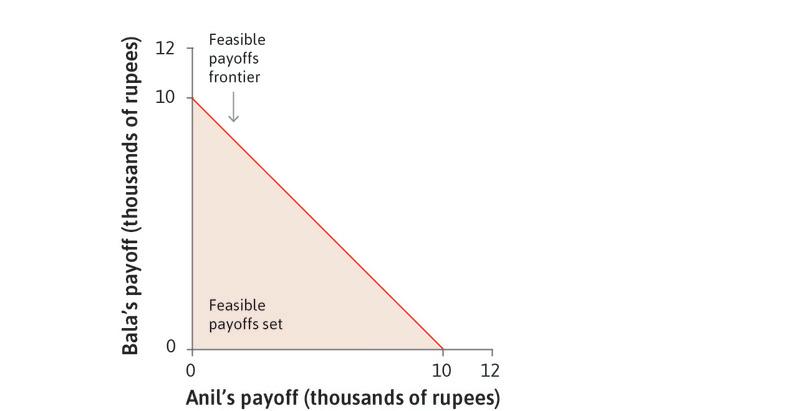

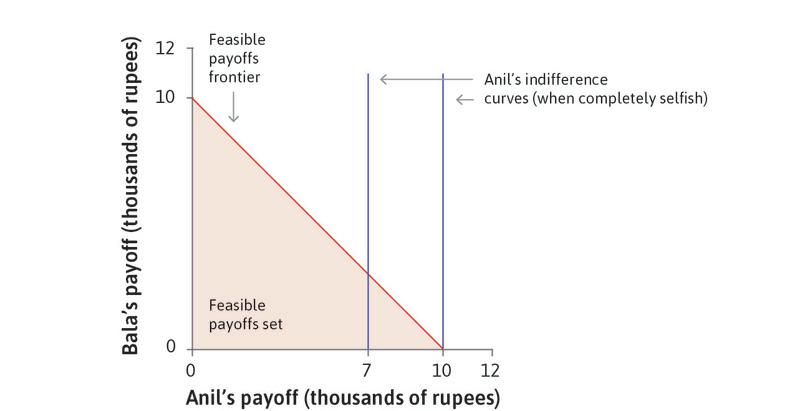

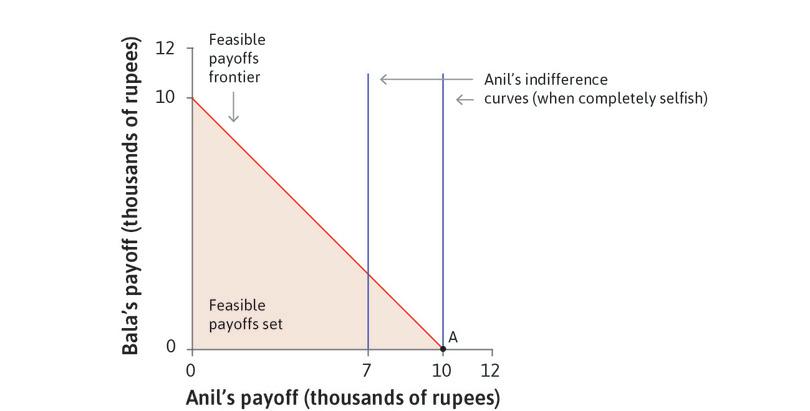

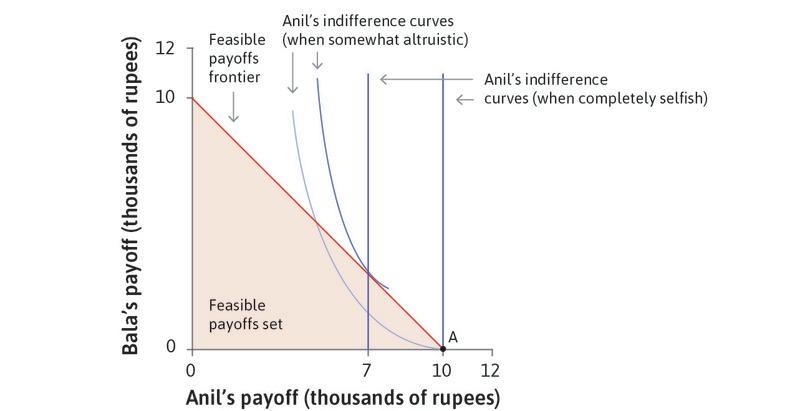

Imagine the following situation. Anil was given some tickets for the national lottery, and one of them won a prize of 10,000 rupees. He can, of course, keep all the money for himself, but he can also share some of it with his neighbour Bala. Figure 4.5 represents the situation graphically. The horizontal axis represents the amount of money (in thousands of rupees) that Anil keeps for himself, and the vertical axis the amount that he gives to Bala. Each point (x, y) represents a combination of amounts of money for Anil (x) and Bala (y) in thousands of rupees. The shaded triangle is Anil’s feasible set. At the corner (10, 0) on the horizontal axis, Anil keeps it all. At the other corner (0, 10) on the vertical axis, Anil gives it all to Bala.

- zero sum game

- A game in which the payoff gains and losses of the individuals sum to zero, for all combinations of strategies they might pursue.

The boundary of the shaded area is the feasible frontier. If Anil is dividing up his prize money between himself and Bala, he chooses a point on that frontier (being inside the frontier would mean throwing away some of the money). The choice among points on the feasible frontier is called a zero sum game because, when choosing point B rather than point A as in Figure 4.5, Anil’s loss is exactly equal to Bala’s gain, so the changes sum to zero (for example, Anil has 3,000 fewer rupees at B than at A, and Bala has 3,000 rupees at B and nothing at A).

Anil’s preferences can be represented by indifference curves, showing combinations of the amounts for Anil and Bala that are all equally preferred by Anil. Figure 4.5 illustrates two cases. In the first, Anil has self-interested preferences so his indifference curves are straight vertical lines; in the second he is somewhat altruistic—he cares about Bala—so his indifference curves are downward-sloping.

If Anil is self-interested, the best option given his feasible set is A, where he keeps all the money. If he derives utility from Bala’s consumption, he has downward-sloping indifference curves so he may prefer an outcome where Bala gets some of the money.

Leibniz: Finding the optimal distribution with altruistic preferences

With the specific indifference curves shown in Figure 4.5, the best feasible option for Anil is point B (7, 3) where Anil keeps 7,000 rupees and gives 3,000 to Bala. Anil prefers to give 3,000 rupees to Bala, even at a cost of 3,000 rupees to him. This is an example of altruism: Anil is willing to bear a cost to benefit somebody else.
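The indifference curves in Figure 4.5 are drawn rather than given by a formula, so the sketch below makes an explicit assumption to reproduce the choice numerically. It represents Anil’s altruistic preferences with the hypothetical utility function u(x, y) = x^0.7 · y^0.3 (x is what he keeps, y is what Bala gets, in thousands of rupees). The weight 0.7 is chosen only so that the optimum lands at B (7, 3); it is not the figure’s actual specification. The code searches the feasible frontier x + y = 10 in steps of 100 rupees.

```python
# Sketch: Anil divides a 10,000-rupee prize between himself (x) and Bala (y).
# ASSUMPTION: the Cobb-Douglas utility below is hypothetical; it is used only
# because it produces downward-sloping (altruistic) indifference curves.

PRIZE = 10.0  # thousands of rupees


def selfish_utility(x: float, y: float) -> float:
    """Self-interested Anil: only his own money matters (vertical indifference curves)."""
    return x


def altruistic_utility(x: float, y: float, alpha: float = 0.7) -> float:
    """Hypothetical altruistic Anil: values both amounts, with weight alpha on his own."""
    return (x ** alpha) * (y ** (1 - alpha))


def best_split(utility, prize: float = PRIZE, steps: int = 100):
    """Evaluate evenly spaced points on the frontier x + y = prize; return the best (x, y)."""
    candidates = [(prize * i / steps, prize - prize * i / steps) for i in range(steps + 1)]
    return max(candidates, key=lambda point: utility(*point))


print(best_split(selfish_utility))     # point A: Anil keeps everything
print(best_split(altruistic_utility))  # point B under the assumed utility
```

Staying on the frontier reflects the point made above: any point inside it would throw money away. With a different (equally hypothetical) weight, Anil’s best point would move along the frontier.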

Exercise 4.3 Altruism and selflessness

Using the same axes as in Figure 4.5:

- What would Anil’s indifference curves look like if he cared just as much about Bala’s consumption as his own?

- What would they look like if he derived utility only from the total of his and Bala’s consumption?

- What would they look like if he derived utility only from Bala’s consumption?

- For each of these cases, provide a real world situation in which Anil might have these preferences, making sure to specify how Anil and Bala derive their payoffs.

Question 4.4 Choose the correct answer(s)

In Figure 4.5 Anil has just won the lottery and has received 10,000 rupees. He is considering how much (if at all) he would like to share this sum with his friend Bala. Before he manages to share his winnings, Anil receives a tax bill for these winnings of 3,000 rupees. Based on this information, which of the following statements is true?

- Without the tax Anil would have given exactly 3,000 rupees to Bala. With the total income now at 7,000 rupees, Anil will choose to give less than this.

- We assume that preferences are fixed. Hence Anil will remain somewhat altruistic and give Bala some of his winnings.

- The tax bill can be depicted as an inward shift of the feasible frontier. Therefore, Anil’s optimal choice will result in him being on a lower indifference curve than before.

- Bala would have received 10,000 and 7,000 rupees respectively before and after the tax bill.

4.5 Altruistic preferences in the prisoners’ dilemma

When Anil and Bala wanted to get rid of pests (Section 4.3), they found themselves in a prisoners’ dilemma. One reason for the unfortunate outcome was that they did not account for the costs that their actions inflicted on the other. The choice of pest control regime using the insecticide implied a free ride on the other farmer’s contribution to ensuring clean water.

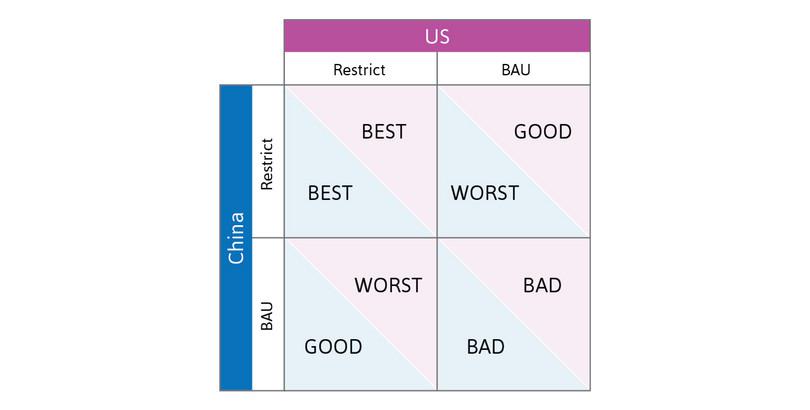

If Anil cares about Bala’s wellbeing as well as his own, the outcome can be different.

In Figure 4.6 the two axes now represent Anil’s and Bala’s payoffs. Just as with the example of the lottery, the diagram shows the feasible outcomes. However, in this case the feasible set has only four points. We have shortened the names of the strategies for convenience: Terminator is T, IPC is I. Notice that movements upward and to the right, from (T, T) to (I, I), are win-win: both get higher payoffs. On the other hand, movements up and to the left, or down and to the right, such as from (I, T) to (T, I) or the reverse, are win-lose changes. Win-lose means that Bala gets a higher payoff at the expense of Anil, or Anil benefits at the expense of Bala.

As in the case of dividing lottery winnings, we look at two cases. If Anil does not care about Bala’s wellbeing, his indifference curves are vertical lines. If he does care, he has downward-sloping indifference curves. Work through Figure 4.6 to see what will happen in each case.

Figure 4.6 demonstrates that when Anil is completely self-interested, his dominant strategy is Terminator (as we saw before). But if Anil cares sufficiently about Bala, his dominant strategy is IPC. If Bala feels the same way, then the two would both choose IPC, resulting in the outcome that both of them prefer the most.
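The sketch below checks this claim numerically under stated assumptions. The payoffs are illustrative, chosen to match Section 4.4 (switching from IPC to Terminator gains Anil 1 and costs Bala 2), and Anil’s utility is taken to be his own payoff plus a hypothetical weight `a` times Bala’s payoff. That linear form gives straight, downward-sloping indifference curves, which may differ from the curves drawn in Figure 4.6.

```python
# Sketch: does caring about Bala change Anil's dominant strategy?
# ASSUMPTIONS: illustrative payoffs (Anil gains 1 and Bala loses 2 when Anil
# switches from IPC to Terminator) and a hypothetical linear utility
# own payoff + a * Bala's payoff.

STRATEGIES = ("I", "T")  # I = IPC, T = Terminator

# PAYOFFS[(anil_choice, bala_choice)] = (anil_payoff, bala_payoff)
PAYOFFS = {
    ("I", "I"): (3, 3),
    ("T", "I"): (4, 1),
    ("I", "T"): (1, 4),
    ("T", "T"): (2, 2),
}


def anil_utility(anil: str, bala: str, a: float) -> float:
    own, other = PAYOFFS[(anil, bala)]
    return own + a * other


def dominant_strategy(a: float):
    """Return Anil's strictly dominant strategy for weight a, or None if none exists."""
    for s in STRATEGIES:
        alternatives = [t for t in STRATEGIES if t != s]
        if all(anil_utility(s, b, a) > anil_utility(t, b, a)
               for b in STRATEGIES for t in alternatives):
            return s
    return None


print(dominant_strategy(0.0))  # completely self-interested Anil
print(dominant_strategy(0.8))  # Anil cares sufficiently about Bala
```

Under these assumptions IPC becomes dominant once `a` exceeds 0.5: choosing IPC costs Anil 1 and gives Bala 2, so he prefers it whenever 2a > 1. At exactly a = 0.5 he is indifferent and neither strategy strictly dominates.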

The main lesson is that if people care about one another, social dilemmas are easier to resolve. This helps us understand the historical examples in which people mutually cooperate for irrigation or enforce the Montreal Protocol to protect the ozone layer, rather than free riding on the cooperation of others.

Question 4.5 Choose the correct answer(s)

Figure 4.6 shows Anil’s preferences in the prisoners’ dilemma game he plays with Bala, both when he is completely selfish and when he is somewhat altruistic.

Based on the graph, we can say that:

- (T, I) is on a ‘higher’ vertical indifference curve than (I, I) (that is, it is further to the right) and (T, T) is on a higher vertical indifference curve than (I, T). So using Terminator is a dominant strategy for Anil when he is completely selfish.

- When Anil is somewhat altruistic, (I, I) is on a higher indifference curve than (T, I), and (I, T) is on a higher indifference curve than (T, T). So using IPC is Anil’s dominant strategy.

- Terminator is a dominant strategy for both players, so (T, T) is a dominant strategy equilibrium. Anil would prefer (T, I) but Bala will never choose IPC.

- IPC is a dominant strategy for Anil when he is somewhat altruistic. If Bala has the same preferences IPC will be a dominant strategy for him too, so (I, I) is the dominant strategy equilibrium.

Exercise 4.4 Amoral self-interest

Imagine a society in which everyone was entirely self-interested (cared only about his or her own wealth) and amoral (followed no ethical rules that would interfere with gaining that wealth). How would that society be different from the society you live in? Consider the following:

- families

- workplaces

- neighbourhoods

- traffic

- political activity (would people vote?)

4.6 Public goods, free riding, and repeated interaction

Now let’s look at the second reason for an unfortunate outcome in the prisoners’ dilemma game. There was no way that either Anil or Bala (or anyone else) could make whoever used the insecticide pay for the harm that it caused.

The problems of Anil and Bala are hypothetical, but they capture the real dilemmas of free riding that many people around the world face. For example, as in Spain, many farmers in Southeast Asia rely on a shared irrigation facility to produce their crops. The system requires constant maintenance and new investment. Each farmer faces the decision of how much to contribute to these activities. These activities benefit the entire community, and if a farmer does not volunteer to contribute, others may do the work anyway.

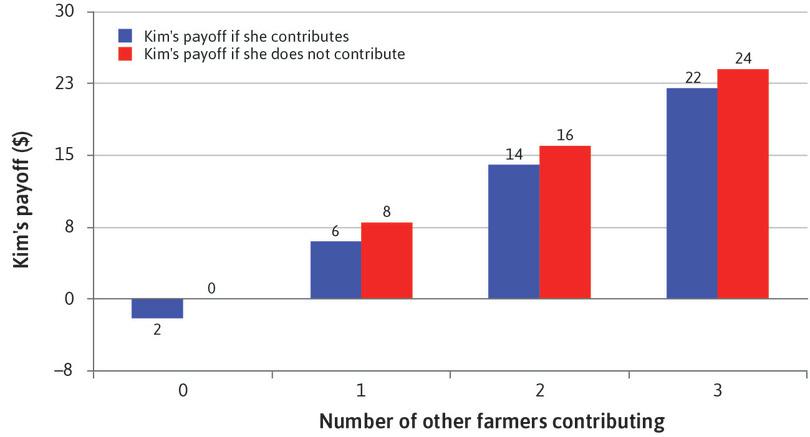

Imagine there are four farmers who are deciding whether to contribute to the maintenance of an irrigation project.

- public good

- A good for which use by one person does not reduce its availability to others. Also known as: non-rival good. See also: non-excludable public good, artificially scarce good.

For each farmer, the cost of contributing to the project is $10. But when one farmer contributes, all four of them will benefit from an increase in their crop yields made possible by irrigation, so they will each gain $8. The irrigation project is a public good: when one individual bears a cost to provide it, everyone receives a benefit.

Now, consider the decision facing Kim, one of the four farmers. Figure 4.7 shows how her total earnings depend on her own decision, and also on the number of other farmers who decide to contribute to the irrigation project.

For example, if two of the others contribute, Kim will receive a benefit of $8 from each of their contributions. So if she makes no contribution herself, her total payoff, shown in red, is $16. If she decides to contribute, she will receive an additional benefit of $8 (and so will the other three farmers). But she will incur a cost of $10, so her total payoff is $14, as in Figure 4.7, and as calculated in Figure 4.8.

| Component | | Amount ($) |
|---|---|---|
| Benefit from the contribution of others | | 16 |
| Plus benefit from her own contribution | + | 8 |
| Minus cost of her contribution | – | 10 |
| Total | | 14 |

Figure 4.8 Example: When two others contribute, Kim’s payoff is lower if she contributes too.

Figures 4.7 and 4.8 illustrate the social dilemma. Whatever the other farmers decide to do, Kim makes more money if she doesn’t contribute than if she does. Not contributing is a dominant strategy. She can free ride on the contributions of the others.

This public goods game is a prisoners’ dilemma in which there are more than two players. If the farmers care only about their own monetary payoff, there is a dominant strategy equilibrium in which no one contributes and their payoffs are all zero. On the other hand, if all contributed, each would get $22. Everyone would benefit if everyone cooperated, but irrespective of what others do, each farmer does better by free riding on the others.
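The payoffs in Figures 4.7 and 4.8 follow from one rule: each contribution gives every farmer $8, and the contributor also pays $10. The sketch below uses only these numbers from the text (the function name `kim_payoff` is illustrative) to tabulate Kim’s payoff for each number of other contributors.

```python
# Sketch of the four-farmer irrigation game described above:
# each contribution costs the contributor $10 and gives every farmer $8.

N_FARMERS = 4
COST = 10
BENEFIT_PER_CONTRIBUTION = 8


def kim_payoff(contributes: bool, others_contributing: int) -> int:
    """Kim's total payoff given her choice and how many of the other 3 farmers contribute."""
    total_contributions = others_contributing + (1 if contributes else 0)
    return BENEFIT_PER_CONTRIBUTION * total_contributions - (COST if contributes else 0)


print("others  not contribute  contribute")
for k in range(N_FARMERS):  # k = 0, 1, 2, 3 other contributors
    print(k, kim_payoff(False, k), kim_payoff(True, k))
```

Whatever the value of k, Kim’s own contribution adds $8 to her payoff but costs her $10, so not contributing always pays $2 more. Yet if all four contribute, each gets $22 rather than the $0 everyone gets when no one does.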

Altruism could help to solve the free rider problem: if Kim cared about the other farmers, she might be willing to contribute to the irrigation project. But if large numbers of people are involved in a public goods game, it is less likely that altruism will be sufficient to sustain a mutually beneficial outcome.

Yet around the world, real farmers and fishing people have faced public goods situations, in many cases with great success. The evidence gathered by Elinor Ostrom, a political scientist, and other researchers on common irrigation projects in India, Nepal, and other countries, shows that the degree of cooperation varies. In some communities a history of trust encourages cooperation. In others, cooperation does not happen. In south India, for example, villages with extreme inequalities in land and caste status had more conflicts over water usage. Less unequal villages maintained irrigation systems better: it was easier to sustain cooperation.8

Great economists: Elinor Ostrom

The choice of Elinor Ostrom (1933–2012), a political scientist, as a co-recipient of the 2009 Nobel Prize surprised most economists. For example, Steven Levitt, a professor at the University of Chicago, admitted he knew nothing about her work, and had ‘no recollection of ever seeing or hearing her name mentioned by an economist’.

Some, however, vigorously defended the decision. Vernon Smith, an experimental economist who had previously been awarded the Prize, congratulated the Nobel committee for recognizing her originality, ‘scientific common sense’ and willingness to listen ‘carefully to data’.

Ostrom’s entire academic career was focused on a concept that plays a central role in economics but is seldom examined in much detail: property. Ronald Coase had established the importance of clearly delineated property rights when one person’s actions affected the welfare of others. But Coase’s main concern was the boundary between the individual and the state in regulating such actions. Ostrom explored the middle ground where communities, rather than individuals or formal governments, held property rights.

The conventional wisdom at the time was that informal collective ownership of resources would lead to a ‘tragedy of the commons’. That is, economists believed that resources could not be used efficiently and sustainably under a common property regime. Thanks to Elinor Ostrom, this is no longer the dominant view.

First, she made a distinction between resources held as common property and those subject to open access:

- Common property involves a well-defined community of users who are able in practice, if not under the law, to prevent outsiders from exploiting the resource. Inshore fisheries, grazing lands, or forest areas are examples.

- Open-access resources, such as ocean fisheries or the atmosphere as a carbon sink, can be exploited without restrictions, other than those imposed by states acting alone or through international agreements.

- social norm

- An understanding that is common to most members of a society about what people should do in a given situation when their actions affect others.

Ostrom was not alone in stressing this distinction, but she drew on a unique combination of case studies, statistical methods, game theoretic models with unorthodox ingredients, and laboratory experiments to try to understand how tragedies of the commons could be averted.

She discovered great diversity in how common property is managed. Some communities were able to devise rules and draw on social norms to enforce sustainable resource use, while others failed to do so. Much of her career was devoted to identifying the criteria for success, and using theory to understand why some arrangements worked well while others did not.

Many economists believed that the diversity of outcomes could be understood using the theory of repeated games, which predicts that even when all individuals care only for themselves, if interactions are repeated with sufficiently high likelihood and individuals are patient enough, then cooperative outcomes can be sustained indefinitely.

But this was not a satisfying explanation for Ostrom, partly because the same theory predicted that any outcome, including rapid depletion, could also arise.

More importantly, Ostrom knew that sustainable use was enforced by actions that clearly deviated from the hypothesis of material self-interest. In particular, individuals would willingly bear considerable costs to punish violators of rules or norms. As the economist Paul Romer put it, she recognized the need to ‘expand models of human preferences to include a contingent taste for punishing others’.

Ostrom developed simple game theoretic models in which individuals have unorthodox preferences, caring directly about trust and reciprocity. And she looked for the ways in which people faced with a social dilemma avoided tragedy by changing the rules so that the strategic nature of the interaction was transformed.

She worked with economists to run a pioneering series of experiments, confirming the widespread use of costly punishment in response to excessive resource extraction, and also demonstrated the power of communication and the critical role of informal agreements in supporting cooperation. Thomas Hobbes, a seventeenth-century philosopher, had asserted that agreements had to be enforced by governments, since ‘covenants, without the sword, are but words and of no strength to secure a man at all’. Ostrom disagreed. As she wrote in the title of an influential article, covenants—even without a sword—make self-governance possible.9

Social preferences partly explain why these communities avoid Garrett Hardin’s tragedy of the commons. But communities may also find ways of deterring free-riding behaviour.

Repeated games

Free riding today on the contributions of other members of one’s community may have unpleasant consequences tomorrow or years from now. Ongoing relationships are an important feature of social interactions that was not captured in the models we have used so far: life is not a one-shot game.

The interaction between Anil and Bala in our model was a one-shot game. But as owners of neighbouring fields, Anil and Bala are more realistically portrayed as interacting repeatedly.

Imagine how differently things would work out if we represented their interaction as a game to be repeated each season. Suppose that Bala has adopted IPC. What is Anil’s best response? He would reason like this:

- Anil

- If I play IPC, then maybe Bala will continue to do so, but if I use Terminator—which would raise my profits this season—Bala would use Terminator next year. So unless I am extremely impatient for income now, I’d better stick with IPC.

Bala could reason in exactly the same way. The result might be that they would then continue playing IPC forever.
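Anil’s reasoning can be made precise with a discount factor. The sketch below rests on assumptions not in the text: illustrative per-season payoffs (3 each at IPC/IPC, 4 for Anil if he uses Terminator while Bala uses IPC, 2 each at Terminator/Terminator), a ‘grim’ rule in which both use Terminator in every season after a defection, and a hypothetical discount factor `delta` (how much Anil values a payoff one season ahead relative to today). A lower `delta` means a more impatient Anil.

```python
# Sketch: when does sticking with IPC beat a one-off switch to Terminator?
# ASSUMPTIONS (hypothetical, for illustration only):
#   - per-season payoffs: 3 each if both use IPC, 4 for Anil if he uses
#     Terminator while Bala uses IPC, 2 each if both use Terminator;
#   - after Anil defects, both use Terminator in every later season;
#   - Anil values a payoff one season ahead at delta times its value today.

COOPERATE, TEMPTATION, PUNISHMENT = 3, 4, 2


def value_of_cooperating(delta: float) -> float:
    # 3 + 3*delta + 3*delta**2 + ... = 3 / (1 - delta)
    return COOPERATE / (1 - delta)


def value_of_defecting(delta: float) -> float:
    # 4 this season, then 2 in every later season: 4 + 2*delta / (1 - delta)
    return TEMPTATION + PUNISHMENT * delta / (1 - delta)


def sticks_with_ipc(delta: float) -> bool:
    return value_of_cooperating(delta) >= value_of_defecting(delta)


for delta in (0.2, 0.4, 0.6, 0.9):
    print(delta, sticks_with_ipc(delta))
```

Multiplying both sides of 3/(1 − δ) ≥ 4 + 2δ/(1 − δ) by (1 − δ) gives 3 ≥ 4 − 2δ, so under these assumptions cooperation pays whenever δ ≥ 0.5. Only a sufficiently impatient Anil would switch to Terminator.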

In the next section, we will look at experimental evidence of how people behave when a public goods game is repeated.

Question 4.6 Choose the correct answer(s)

Four farmers are deciding whether to contribute to the maintenance of an irrigation project. For each farmer, the cost of contributing to the project is $10. But when one farmer contributes, all four of them will benefit from an increase in their crop yields, so they will each gain $8.

Which of the following statements is correct?

- Do Not Contribute is a dominant strategy for all the farmers: whatever the others do, their own benefit from contributing is $8, but the cost is $10.

- In this case she will gain $16 from contributing, which is higher than the cost.

- Whatever Kim does, the dominant strategy for a selfish farmer is Do Not Contribute.

- If the farmers have an ongoing relationship they may all decide to contribute, to gain the future benefits of continued cooperation. If any of the neighbours failed to contribute in any year, cooperation would break down. Knowing this, they would have an incentive to contribute in the present.

4.7 Public good contributions and peer punishment

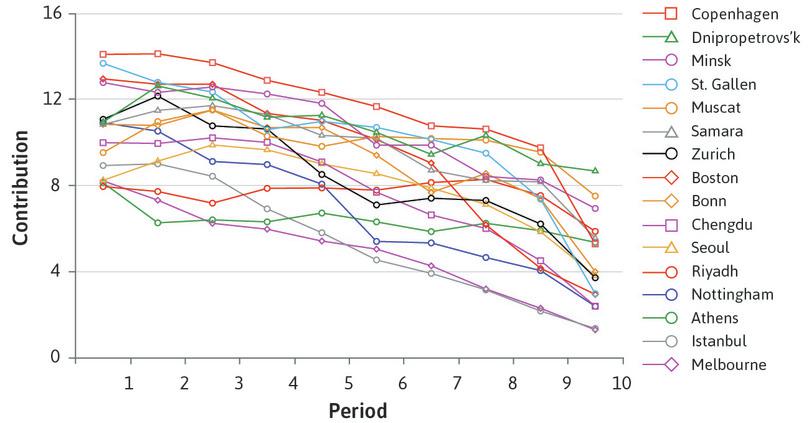

An experiment demonstrates that people can sustain high levels of cooperation in a public goods game, as long as they have opportunities to target free riders once it becomes clear who is contributing less than the norm.

Figure 4.9a shows the results of laboratory experiments that mimic the costs and benefits from contribution to a public good in the real world. The experiments were conducted in cities around the world. In each experiment participants play 10 rounds of a public goods game, similar to the one involving Kim and the other farmers that we just described. In each round, the people in the experiment (we call them subjects) are given $20. They are randomly sorted into small groups, typically of four people, who don’t know each other. They are asked to decide on a contribution from their $20 to a common pool of money. The pool is a public good. For every dollar contributed, each person in the group receives $0.40, including the contributor.

Imagine that you are playing the game, and you expect the other three members of your group each to contribute $10. Then if you don’t contribute, you will get $32 (three returns of $4 from their contributions, plus the initial $20 that you keep). The others have paid $10, so they only get $32 – $10 = $22 each. On the other hand, if you also contribute $10, then everyone, including you, will get $22 + $4 = $26. Unfortunately for the group, you do better by not contributing, because the reward for free riding ($32) is greater than the reward for contributing ($26). And, unfortunately for you, the same applies to each of the other members.
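The arithmetic in this example can be checked with a short sketch. It uses only the rules stated above: a $20 endowment and a return of $0.40 to every group member for each dollar in the pool. The function name `payoffs` is illustrative.

```python
# Sketch of one round of the laboratory public goods game described above:
# each subject starts with $20, and every dollar put in the pool returns
# $0.40 to each group member, including the contributor.

ENDOWMENT = 20.0
RETURN_PER_DOLLAR = 0.40


def payoffs(contributions):
    """Return each subject's earnings for one round, given everyone's contribution."""
    pool_share = RETURN_PER_DOLLAR * sum(contributions)
    return [ENDOWMENT - c + pool_share for c in contributions]


print(payoffs([0, 10, 10, 10]))   # you (first entry) free ride
print(payoffs([10, 10, 10, 10]))  # you contribute $10 as well
```

Each dollar you contribute returns only $0.40 to you, so contributing always lowers your own earnings, even though it raises the group’s total by $1.60.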

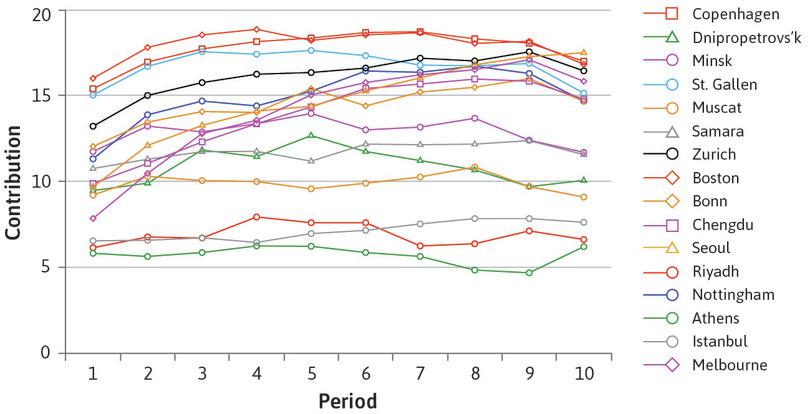

After each round, the participants are told the contributions of other members of their group. In Figure 4.9a, each line represents the evolution over time of average contributions in a different location around the world. Just as in the prisoners’ dilemma experiments, people are clearly not solely self-interested.

Figure 4.9a Worldwide public goods experiments: Contributions over 10 periods.

Benedikt Herrmann, Christian Thöni, and Simon Gächter. 2008. ‘Antisocial Punishment Across Societies’. Science 319 (5868): pp. 1362–67.

As you can see, players in Chengdu contributed $10 in the first round, just as we described above. In every population where the game was played, contributions to the public good were high in the first period, although much more so in some cities (Copenhagen) than in others (Melbourne). This is remarkable: if you care only about your own payoff, contributing nothing at all is the dominant strategy. The high initial contributions could have occurred because the participants in the experiment valued their contribution to the payoffs that others received (they were altruistic). But the difficulty (or, as Hardin would have described it, the tragedy) is obvious. Everywhere, the contributions to the public good decreased over time.

Nevertheless, the results also show that despite a large variation across societies, most of them still have high contribution levels at the end of the experiment.

The most plausible explanation of the pattern is not altruism. It is likely that contributors decreased their level of cooperation if they observed that others were contributing less than expected and were therefore free riding on them. It seems as if those people who contributed more than the average liked to punish the low contributors for their unfairness, or for violating a social norm of contributing. Since the payoffs of free riders depend on the total contribution to the public good, the only way to punish free riders in this experiment was to stop contributing. This is the tragedy of the commons.

Many people are happy to contribute as long as others reciprocate. A disappointed expectation of reciprocity is the most convincing reason that contributions fell so regularly in later rounds of this game.

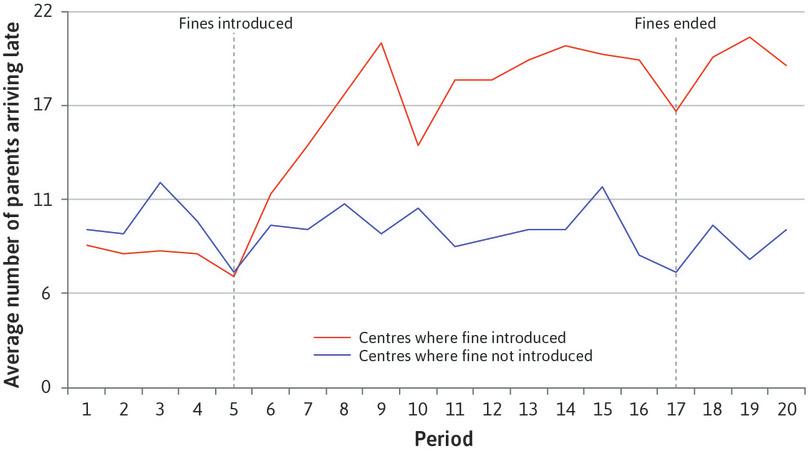

To test this, the experimenters took the public goods game experiment shown in Figure 4.9a and introduced a punishment option. After observing the contributions of their group, individual players could pay to punish other players by making them pay a $3 fine. The punisher remained anonymous, but had to pay $1 per player punished. The effect is shown in Figure 4.9b. For the majority of subjects, including those in China, South Korea, northern Europe and the English-speaking countries, contributions increased when they had the opportunity to punish free riders.

Figure 4.9b Worldwide public goods experiments with opportunities for peer punishment.

Benedikt Herrmann, Christian Thöni, and Simon Gächter. 2008. ‘Antisocial Punishment Across Societies’. Science 319 (5868): pp. 1362–67.

People who think that others have been unfair or have violated a social norm may retaliate, even if the cost to themselves is high. Their punishment of others is a form of altruism, because it costs them something to help deter free riding behaviour that is detrimental to the wellbeing of most members of the group.
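The cost of punishing, and its effect on a free rider, can be illustrated with the numbers from the experiment: each punishment costs the punisher $1 and fines the target $3. The scenario is a hypothetical assumption. One subject free rides while the other three each contribute $10 (earnings of $32 and $22, as in the earlier example), and each of the three then punishes the free rider once.

```python
# Sketch: how peer punishment can change the free rider's calculation.
# From the text: each punishment costs the punisher $1 and fines the target $3.
# ASSUMPTION (hypothetical scenario): subject 0 free rides, the other three
# contribute $10 each, and each of them punishes subject 0 once.

FINE = 3
COST_TO_PUNISHER = 1


def after_punishment(earnings, punishments):
    """Apply punishments, given as (punisher_index, target_index) pairs."""
    result = list(earnings)
    for punisher, target in punishments:
        result[punisher] -= COST_TO_PUNISHER
        result[target] -= FINE
    return result


before = [32, 22, 22, 22]  # earnings before punishment (from the example above)
after = after_punishment(before, [(1, 0), (2, 0), (3, 0)])
print(after)
```

In this hypothetical case the free rider ends up with $23, less than the $26 they would have earned by contributing, while each punisher gives up $1 of their own earnings. Whether punishment actually deters free riding depends on how many people are willing to bear that cost, which is what the experiment measures.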

This experiment illustrates how, even in large groups of people, a combination of repeated interactions and social preferences can support high levels of contribution to the public good.

The public goods game, like the prisoners’ dilemma, is a situation in which there is something to gain for everyone by engaging with others in a common project such as pest control, maintaining an irrigation system, or controlling carbon emissions. But there is also something to lose when others free ride.

4.8 Behavioural experiments in the lab and in the field

To understand economic behaviour, we need to know about people’s preferences. In the previous unit, for example, students and farmers valued free time. How much they valued it was part of the information we needed to predict how much time they would spend studying and farming.

- revealed preference

- A way of studying preferences by reverse engineering the motives of an individual (her or his preferences) from observations of her or his actions.

In the past, economists have learned about our preferences from:

- Survey questions: To determine political preferences, brand loyalty, degree of trust of others, or religious orientation.

- Statistical studies of economic behaviour: For example, purchases of one or more goods when the relative price varies—to determine preferences for the goods in question. One strategy is to reverse-engineer what the preferences must have been, as revealed by purchases. This is called revealed preference.

Surveys have a problem. Asking someone if they like ice cream will probably get an honest answer. But the answer to the question: ‘How altruistic are you?’ may be a mixture of truth, self-advertising, and wishful thinking. Statistical studies cannot control the decision-making environment in which the preferences were revealed, so it is difficult to compare the choices of different groups.

This is why economists sometimes use experiments, so that people’s behaviour can be observed under controlled conditions.

How economists learn from facts: Laboratory experiments

Behavioural experiments have become important in the empirical study of preferences.10 Part of the motivation for experiments is that understanding someone’s motivations (altruism, reciprocity, inequality aversion as well as self-interest) is essential to being able to predict how they will behave as employees, family members, custodians of the environment, and citizens.

Experiments measure what people do rather than what they say. Experiments are designed to be as realistic as possible, while controlling the situation:

- Decisions have consequences: The decisions in the experiment may decide how much money the subjects earn by taking part. Sometimes the stakes can be as high as a month’s income.

- Instructions, incentives and rules are common to all subjects: Everyone in a treatment group also receives the same treatment. This means that when we compare a control group with a treatment group, the only difference between them is the treatment itself, so that its effects can be identified.

- Experiments can be replicated: They are designed to be implementable with other groups of participants.

- Experimenters attempt to control for other possible explanations: Other variables are kept constant wherever possible, because they may affect the behaviour we want to measure.

This means that when people behave differently in the experiment, it is likely due to differences in their preferences, not in the situation that each person faces.

Economists have studied public goods extensively using laboratory experiments in which the subjects are asked to make decisions about how much to contribute to a public good. In some cases, economists have designed experiments that closely mimic real-world social dilemmas. The work of Juan Camilo Cárdenas, an economist at the Universidad de los Andes in Bogotá, Colombia, is an example. He performs experiments about social dilemmas with people who are facing similar problems in their real lives, such as overexploitation of a forest or a fish stock. In our ‘Economist in action’ video he describes his use of experimental economics in real-life situations, and how it helps us understand why people cooperate even when there are apparent incentives not to do so.

Economists have discovered that the way people behave in experiments can be used to predict how they react in real-life situations. For example, fishermen in Brazil who acted more cooperatively in an experimental game also practiced fishing in a more sustainable manner than the fishermen who were less cooperative in the experiment.

For a summary of the kinds of experiments that have been run, the main results, and whether behaviour in the experimental lab predicts real-life behaviour, read the research of some of the economists who specialize in experimental economics: for example, Colin Camerer and Ernst Fehr,10 Armin Falk and James Heckman,11 or the experiments done by Joseph Henrich and a large team of collaborators around the world.12

In Exercise 4.5, however, Steven Levitt and John List ask whether people would behave the same way in the street as they do in the laboratory.

Question 4.7 Choose the correct answer(s)

According to the ‘Economist in action’ video of Juan Camilo Cárdenas, which of the following have economists discovered using experiments simulating public goods scenarios?

- This is one of the findings that Professor Cárdenas mentions.

- Professor Cárdenas finds that populations with greater inequality exhibit less trust and cooperation.

- Cooperative behaviour occurs even when experimental participants are offered real cash, as in Professor Cárdenas’ experiments.

- This is one of the findings that Professor Cárdenas mentions.

Exercise 4.5 Are lab experiments always valid?

In 2007, Steven Levitt and John List published a paper called ‘What Do Laboratory Experiments Measuring Social Preferences Reveal about the Real World?’. Read the paper to answer these two questions.

- According to their paper,13 why and how might people’s behaviour in real life vary from what has been observed in laboratory experiments?

- Using the example of the public goods experiment in this section, explain why you might observe systematic differences between the observations recorded in Figures 4.9a and 4.9b, and what might happen in real life.

Sometimes it is possible to conduct experiments ‘in the field’: that is, to deliberately change the economic conditions under which people make decisions, and observe how their behaviour changes. An experiment conducted in Israel in 1998 demonstrated that social preferences may be very sensitive to the context in which decisions are made.

It is common for parents to rush to pick up their children from daycare. Sometimes a few parents are late, making teachers stay extra time. What would you do to deter parents from being late? Two economists ran an experiment introducing fines in some daycare centres but not others (these were used as controls). The ‘price of lateness’ went from zero to ten Israeli shekels (about $3 at the time). Surprisingly, after the fine was introduced, the frequency of late pickups doubled. The top line in Figure 4.10 illustrates this.

Figure 4.10 Average number of late-coming parents, per week.

Uri Gneezy and Aldo Rustichini. 2000. ‘A Fine Is a Price’. The Journal of Legal Studies 29 (January): pp. 1–17.

Why did putting a price on lateness backfire?

One possible explanation is that before the fine was introduced, most parents were on time because they felt that it was the right thing to do. In other words, they came on time because of a moral obligation to avoid inconveniencing the daycare staff. Perhaps they felt an altruistic concern for the staff, or regarded a timely pick-up as a reciprocal responsibility in the joint care of the child. But the imposition of the fine signalled that the situation was really more like shopping. Lateness had a price and so could be purchased, like vegetables or ice-cream.14

- crowding out

- There are two quite distinct uses of the term. One is the observed negative effect when economic incentives displace people’s ethical or other-regarding motivations. In studies of individual behaviour, incentives may have a crowding out effect on social preferences. A second use of the term is to refer to the effect of an increase in government spending in reducing private spending, as would be expected, for example, in an economy working at full capacity utilization, or when a fiscal expansion is associated with a rise in the interest rate.

The use of a market-like incentive (the price of lateness) provided what psychologists call a new ‘frame’ for the decision, making it one in which self-interest rather than concern for others was acceptable. When fines and prices have these unintended effects, we say that incentives have crowded out social preferences. Even worse, you can also see from Figure 4.10 that when the fine was removed, parents continued to pick up their children late.

Question 4.8 Choose the correct answer(s)

Figure 4.10 depicts the average number of late-coming parents per week in daycare centres, where a fine was introduced in some centres and not in others. The fines were eventually abolished, as indicated on the graph.

Based on this information, which of the following statements is correct?

- The graph shows that the number of late-coming parents more than doubled in the centres where the fine was introduced.

- The parents paid the fine if they were late and not otherwise. So it can be considered as a price for lateness.

- The graph shows that the number of late-coming parents remained high after the fine was abolished, so it is possible that the experiment had a permanent effect.

- The crowding out of the social preference occurs when the moral obligation of not being late is replaced by the market-like incentive of purchasing the right to be late without ethical qualms. This is evident in the graph immediately after the introduction of the fines.

Exercise 4.6 Crowding out

Imagine you are the mayor of a small town and wish to motivate your citizens to get involved in ‘City Beautiful Day’, on which people spend a day helping to clean parks and roads.

How would you design the day to motivate citizens to take part?

4.9 Cooperation, negotiation, conflicts of interest, and social norms

- cooperation

- Participating in a common project that is intended to produce mutual benefits.

Cooperation means participating in a common project in such a way that mutual benefits occur. Cooperation need not be based on an agreement. We have seen examples in which players acting independently can still achieve a cooperative outcome:

- The invisible hand: Anil and Bala chose their crops in pursuit of their own interests. Their engagement in the village market resulted in a mutually beneficial division of labour.

- The repeated prisoners’ dilemma: Anil and Bala may refrain from using Terminator for pest control because they recognize the future losses they would suffer as a result of abandoning IPC.

- The public goods game: Players’ willingness to punish others sustained high levels of cooperation in many countries, without the need for agreements.

In other cases, such as the one-shot prisoners’ dilemma, independent actions led to an unfortunate outcome. In such cases, the players could do better if they could reach an agreement.

People commonly resort to negotiation to solve their economic and social problems. For example, international negotiation resulted in the Montreal Protocol, through which countries agreed to eliminate the use of chlorofluorocarbons (CFCs), in order to avoid a harmful outcome (the destruction of the ozone layer).

But negotiation does not always succeed, sometimes because of conflicts of interest over how the mutual gains to cooperation will be shared. The success of the Montreal Protocol contrasts with the relative failure of the Kyoto Protocol in reducing the carbon emissions responsible for global warming. The reasons are partly scientific. The alternative technologies to CFCs were well-developed, and the benefits relative to costs for large industrial countries, such as the US, were much clearer and larger than in the case of greenhouse gas emissions. But one of the obstacles to agreement at the Copenhagen climate change summit in 2009 was disagreement over how to share the costs and benefits of limiting emissions between developed and developing countries.

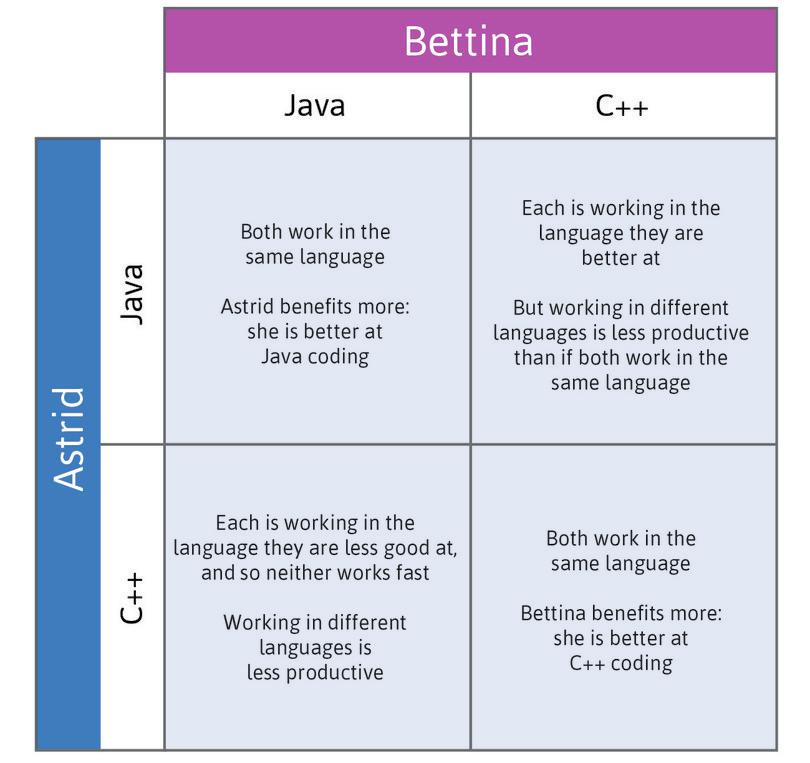

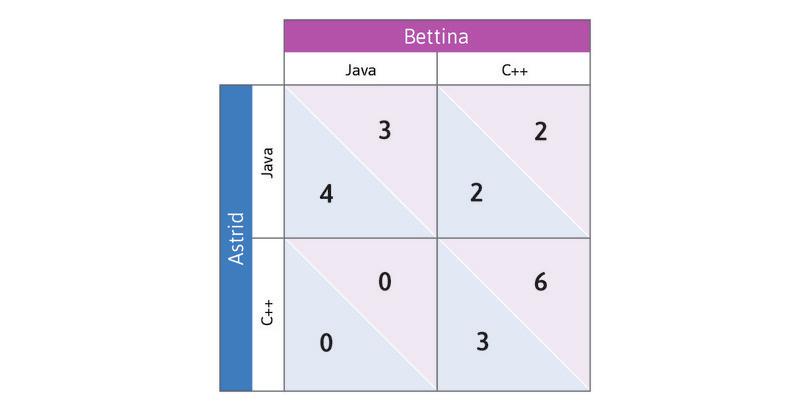

As a simpler example of a conflict of interest, consider a professor who might be willing to hire a student as a research assistant for the summer. In principle, both have something to gain from the relationship: the professor gets help with his research, and the student gets a good opportunity to earn some money and learn. In spite of the potential for mutual benefit, there is also some room for conflict. The professor may want to pay less and have more of his research grant left over to buy a new computer, or he may need the work done quickly, meaning the student can’t take time off. After negotiating, they may reach a compromise and agree that the student can earn a small salary while working from the beach. Or, perhaps, the negotiation will fail.

There are many situations like this in economics. Negotiation (sometimes called bargaining) is also an integral part of politics, foreign affairs, law, social life, and even family dynamics. A parent may give a child a smartphone to play with in exchange for a quiet evening, a country might consider giving up land in exchange for peace, or a government might be willing to negotiate a deal with student protesters to avoid political instability. As with the student and the professor, any of these bargains may fail to happen if either side is unwilling to accept its terms.

Negotiation: Sharing mutual gains

To help think about what makes a deal work, consider the following situation. You and a friend are walking down an empty street and you see a $100 note on the ground. How would you decide how to split your lucky find? If you split the amount equally, perhaps this reflects a social norm in your community that says that something you get by luck should be split 50–50.

Dividing something of value in equal shares (the 50–50 rule) is a social norm in many communities, as is giving gifts on birthdays to close family members and friends. Social norms are common to an entire group of people (almost all follow them) and tell a person what they should do in the eyes of most people in the community.

In economics we think of people as making decisions according to their preferences, by which we mean all of the likes, dislikes, attitudes, feelings, and beliefs that motivate them. So everyone’s preferences are individual. They may be influenced by social norms, but they reflect what people want to do as well as what they think they ought to do.

We would expect that, even if there were a 50–50 norm in a community, some individuals might not respect the norm exactly. Some people may act more selfishly than the norm requires and others more generously. What happens next will depend both on the social norm (a fact about the world, which reflects attitudes to fairness that have evolved over long periods) and on the specific preferences of the individuals concerned.

- fairness

- A way to evaluate an allocation based on one’s conception of justice.

Suppose the person who saw the money first has picked it up. There are at least three reasons why that person might give some of it to a friend:

- Altruism: We have already considered this reason in the case of Anil and Bala. This person might be altruistic and care about the other being happy, or about another aspect of the other’s wellbeing.

- Fairness: Or, the person holding the money might think that 50–50 is fair. In this case, the person is motivated by fairness, or what economists term inequality aversion.

- Reciprocity: The friend may have been kind to the lucky money-finder in the past, or kind to others, and deserves to be treated generously because of this. In this case we say that our money-finder has reciprocal preferences.

These social preferences all influence our behaviour, sometimes working in opposite directions. For example, if the money-finder has strong fairness preferences but knows that the friend is entirely selfish, the fairness preferences tempt the finder to share but the reciprocity preferences push the finder to keep the money.
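To see how these motives can pull in opposite directions, here is a minimal sketch of the $100 street find. It assumes a hypothetical utility function that is not taken from the text: the finder values their own payoff, puts some weight on the friend’s payoff (altruism, raised if the friend was kind and lowered if the friend was selfish, as a stand-in for reciprocity), and subtracts a penalty proportional to the gap between the two payoffs (a simple stand-in for inequality aversion). The parameter names and values are illustrative assumptions only.

```python
# Hypothetical toy model: how altruism, fairness (inequality aversion) and
# reciprocity might jointly determine how a finder splits a $100 find.
# The functional form and all parameter values are illustrative assumptions.

PIE = 100  # dollars found on the street


def utility(keep: int, give: int, altruism: float, fairness: float,
            reciprocity: float, friend_was_kind: bool) -> float:
    """Finder's utility from keeping `keep` and giving `give` to the friend."""
    # Reciprocity raises the weight on the friend's payoff if the friend was
    # kind in the past, and lowers it (possibly below zero) if not.
    weight_on_friend = altruism + (reciprocity if friend_was_kind else -reciprocity)
    # Inequality aversion: a penalty proportional to the payoff gap.
    return keep + weight_on_friend * give - fairness * abs(keep - give)


def best_split(altruism: float, fairness: float, reciprocity: float,
               friend_was_kind: bool) -> tuple[int, int]:
    """Try every whole-dollar split and return the (keep, give) pair with the highest utility."""
    keep = max(
        range(PIE + 1),
        key=lambda k: utility(k, PIE - k, altruism, fairness,
                              reciprocity, friend_was_kind),
    )
    return keep, PIE - keep


if __name__ == "__main__":
    # Same strong fairness preference; only the friend's past behaviour differs.
    print(best_split(0.0, 0.6, 0.5, friend_was_kind=True))   # (50, 50)
    print(best_split(0.0, 0.6, 0.5, friend_was_kind=False))  # (100, 0)
```

With these assumed weights, the fairness motive alone would lead the finder to share 50–50, but when the friend is known to be selfish the negative reciprocity weight outweighs it and the finder keeps everything, which is the tension described above.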

Question 4.9 Choose the correct answer(s)

Anastasia and Belinda’s favourite hobby is to go metal detecting. On one occasion Anastasia finds four Roman coins while Belinda is unsuccessful. Both women have reciprocal preferences. From this, can we say that:

- It depends how altruistic Anastasia is. She could be altruistic but give only one coin to Belinda.

- Because Anastasia has reciprocal preferences, she may want to punish Belinda for having been selfish in the past. So even if she is altruistic, she may derive greater satisfaction from punishment than sharing.

- Reciprocity means that Anastasia may still share, if she has benefitted from Belinda’s altruism in the past or hopes to benefit from it in the future.

- Anastasia’s altruism and desire not to go against Belinda’s standard of fairness—so as to not incur punishment—may or may not be sufficient to encourage her to split the find 50–50.

4.10 Dividing a pie (or leaving it on the table)

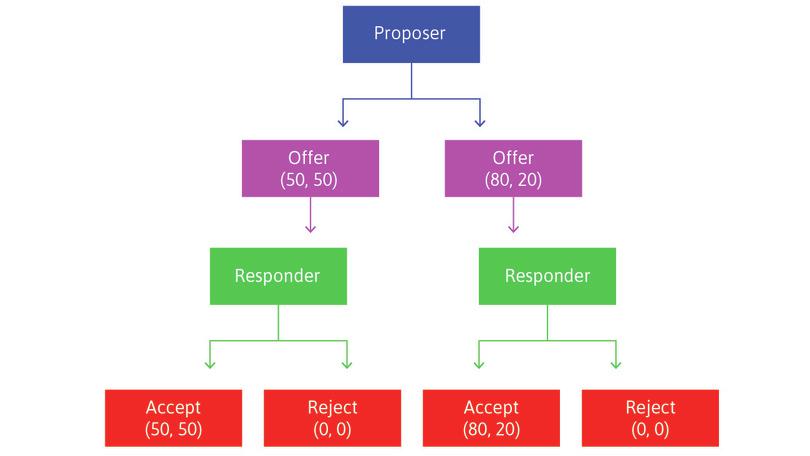

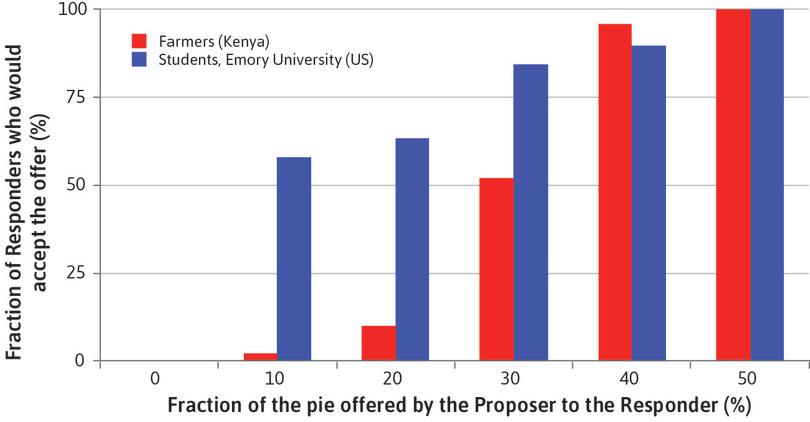

One of the most common tools to study social preferences is a two-person one-shot game known as the ultimatum game. It has been used around the world with experimental subjects including students, farmers, warehouse workers, and hunter-gatherers. By observing their choices we investigate the subjects’ preferences and motives, such as pure self-interest, altruism, inequality aversion, or reciprocity.

The subjects of the experiment are invited to play a game in which they will win some money. How much they win will depend on how they and the others in the game play. Real money is at stake in experimental games like these; otherwise, we could not be sure that the subjects’ answers to a hypothetical question would reflect their actions in real life.

The rules of the game are explained to the players. They are randomly matched in pairs, then one player is randomly assigned as the Proposer, and the other the Responder. The subjects do not know each other, but they know the other player was recruited to the experiment in the same way. Subjects remain anonymous.

The Proposer is provisionally given an amount of money, say $100, by the experimenter, and instructed to offer the Responder part of it. Any split is permitted, including keeping it all, or giving it all away. We will call this amount the ‘pie’ because the point of the experiment is how it will be divided up.

The split takes the form: ‘x for me, y for you’ where x + y = $100. The Responder knows that the Proposer has $100 to split. After observing the offer, the Responder accepts or rejects it. If the offer is rejected, both individuals get nothing. If it is accepted, the split is implemented: the Proposer gets x and the Responder y. For example, if the Proposer offers $35 and the Responder accepts, the Proposer gets $65 and the Responder gets $35. If the Responder rejects the offer, they both get nothing.

This is called a take-it-or-leave-it offer. It is the ultimatum in the game’s name. The Responder is faced with a choice: accept $35, or get nothing.
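The payoff rules just described can be written down as a small function. This is only a sketch of the rules as stated (a $100 pie, an offer y to the Responder, x = 100 − y kept by the Proposer, and nothing for either player on rejection); the function name is our own.

```python
PIE = 100  # dollars provisionally given to the Proposer


def ultimatum_payoffs(offer: int, accepted: bool, pie: int = PIE) -> tuple[int, int]:
    """Return (proposer_payoff, responder_payoff) in a one-shot ultimatum game.

    The Proposer offers `offer` (y) and keeps pie - offer (x), so x + y = pie.
    If the Responder rejects the offer, both players get nothing.
    """
    if not 0 <= offer <= pie:
        raise ValueError("offer must be between 0 and the size of the pie")
    if accepted:
        return pie - offer, offer
    return 0, 0


if __name__ == "__main__":
    print(ultimatum_payoffs(35, accepted=True))   # (65, 35)
    print(ultimatum_payoffs(35, accepted=False))  # (0, 0)
```

The two calls reproduce the example in the text: accepting $35 gives the Proposer $65 and the Responder $35, while rejecting leaves both with nothing.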

- economic rent

- A payment or other benefit received above and beyond what the individual would have received in his or her next best alternative (or reservation option). See also: reservation option.

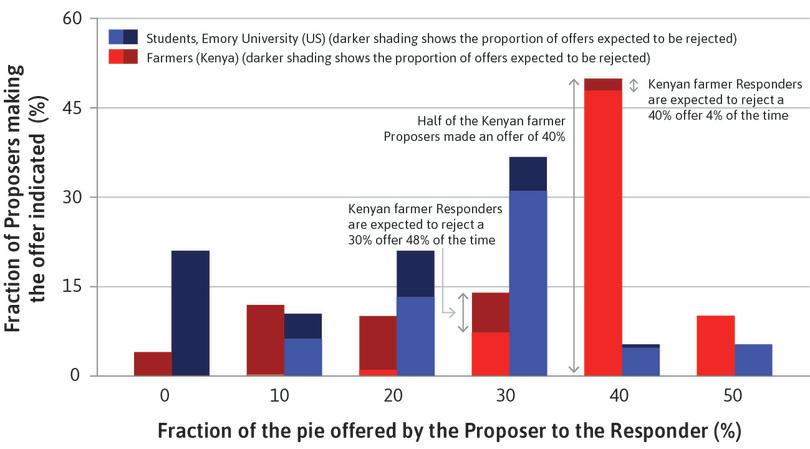

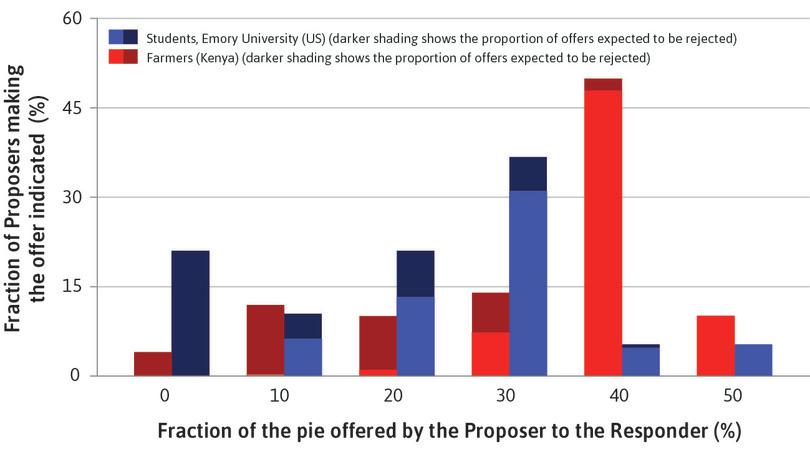

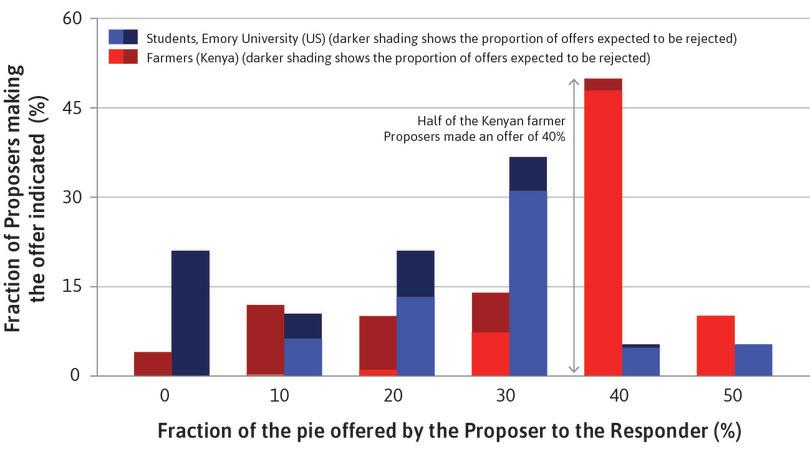

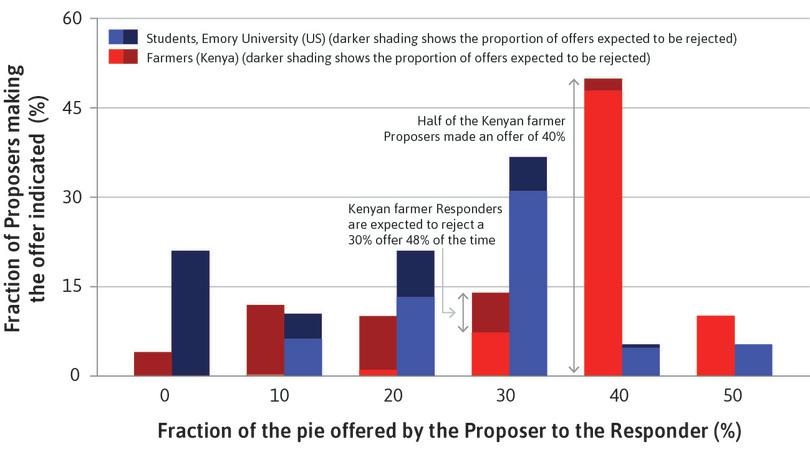

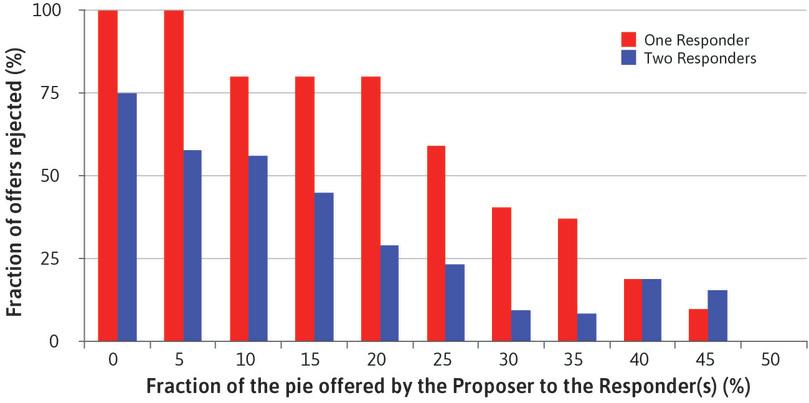

This is a game about sharing the economic rents that arise in an interaction. An entrepreneur wanting to introduce a new technology could share the rent—the higher profit than is available from the current technology—with employees if they cooperate in its introduction. Here, the rent arises because the experimenter provisionally gives the Proposer the pie to divide. If the negotiation succeeds (the Responder accepts), both players receive a rent (a slice of the pie); their next best alternative is to get nothing (the pie is thrown away).
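Using the glossary definition, each player’s rent is what they receive minus their reservation option. The sketch below computes the rents to both players when an offer is accepted; in the experiment both reservation options are zero because a failed deal means the pie is thrown away. The function names, and the rule that a purely self-interested Responder accepts any offer giving her a positive rent, are our own illustrative assumptions.

```python
def economic_rent(payoff: float, reservation: float) -> float:
    """Rent = payoff received minus the next best alternative (reservation option)."""
    return payoff - reservation


def rents_if_accepted(offer: float, pie: float = 100.0,
                      proposer_reservation: float = 0.0,
                      responder_reservation: float = 0.0) -> tuple[float, float]:
    """Rents to (Proposer, Responder) if the Responder accepts `offer`.

    Defaults match the experiment: if the deal fails, the pie is thrown
    away, so each player's next best alternative is zero.
    """
    return (economic_rent(pie - offer, proposer_reservation),
            economic_rent(offer, responder_reservation))


def self_interested_responder_accepts(offer: float,
                                      responder_reservation: float = 0.0) -> bool:
    # Assumption: a Responder who cares only about her own payoff accepts
    # whenever accepting gives her a positive rent (she is indifferent at zero).
    return economic_rent(offer, responder_reservation) > 0


if __name__ == "__main__":
    print(rents_if_accepted(35))                             # (65.0, 35.0)
    # Hypothetical: the Responder could earn $20 elsewhere instead.
    print(rents_if_accepted(35, responder_reservation=20))   # (65.0, 15.0)
    print(self_interested_responder_accepts(1))              # True
```

The second call is purely hypothetical: it shows that the same $35 offer is worth less as a rent to a Responder whose next best alternative is not zero.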